When it comes to marketing mix modeling (MMM), we aren’t shy about our viewpoint: We think you need an MMM that treats experimental data as ground truth.

To be blunt, we think traditional MMMs are built on bad data. And bad data leads to bad recommendations. Garbage in, garbage out. No wonder the marketers in our 2025 Industry Survey said their MMM was one of the least trusted solutions in their measurement stack. A recent report from BCG confirms this, finding that 68% of companies don’t consistently act on MMM results when allocating budget.

But it’s not enough to just say that traditional MMMs are built on flawed data — we want to actually explain why. So we spoke to some of the smartest folks around Haus about the importance of grounding your MMM in experimental data.

Additionally, we understand that this insistence on experimental data can sometimes be frustrating. What if you’re early in your experiment roadmap and don’t have a lot of experimental data to work with? Or maybe some of your channels aren’t testable — how can those inform and power your MMM?

Don’t worry — you have options. So let’s dive in.

Why is MMM data so bad?

Haus’ Principal Economist Phil Erickson doesn’t mince words: “MMM data is just terrible,” he says. And it’s bad for two crucial reasons:

- It’s full of statistical noise.

- And it’s complicated by multicollinearity.

Let’s tackle each of these problems one by one (without turning things into a Stat 101 lecture.)

MMM data is noisy

Data scientists often speak of “separating the signal from the noise.” The signal is the useful pattern or insight — the noise refers to those other variables that make it harder to “hear” the signal.

In the context of your business, “noise” refers to the many factors that affect your P&L. This noise can make the life of a growth marketer awfully…exciting. Yep. That’s one word for it. Our Measurement Strategy Lead, Chandler Dutton, hearkens back to his time leading growth at Magic Spoon as an example of macro instability leading to noisy data.

Launched in 2019, Magic Spoon established a strong influencer presence early on that helped it take off pretty quickly. (If you listened to a podcast around that time, you surely heard about Magic Spoon.)

“Then…a crazy thing happened in March 2020,” says Chandler. “With pandemic lockdowns, there were suddenly a lot more people buying food and beverages online. This remained the case through 2021.”

When pandemic restrictions loosened in 2022, Magic Spoon’s business mostly reverted to normal…whatever normal even meant. Because, really, the tailwinds behind the business were different if you were looking at data from 2019, 2020-2021, then 2022. Other confounding variables: Magic Spoon was pushing into retail, which changed their business. Plus, the launch of iOS 14.5 complicated things even more.

The bottom line: An avalanche of macro factors rendered much of Magic Spoon’s historical data irrelevant. How would you tune an MMM based on the many ups and downs DTC brands experienced from 2020 to today?

“Fundamentally, what models thrive on is stability,” says Chandler. “And you’d be hard-pressed to find a business that’s been very stable over the past five years.”

MMM data is confounded by multicollinearity

Pencils out, it’s stat jargon time. (Kidding.) While multicollinearity might take a second to sound out, it actually sort of means what it sounds like. It just means multiple marketing factors are moving in the same direction at the same time ("collinearly").

“Models function best when dealing with one variable,” explains Chandler. “This is known as ‘variable isolation.’ So say the outcome you’re tracking is sales. What a model wants to see is that a single thing changed to produce that outcome.”

But most businesses just don’t operate that way. If you’re gearing up for Black Friday, you probably aren’t going to increase spend on one channel and then keep others static. You’ll typically push spend up across all your channels at the same time. The same goes if you’re cutting spend. Your spend levels tend to be collinear.

But if all channels are moving up and down at the same time, a model may struggle to understand which channel is leading to a change in KPIs. Traditional MMMs can’t discern whether revenue is up because of Channel A or Channel B. So if you feed this scenario into two different MMMs, you might get two opposite recommendations on where to invest your budget. Garbage in, garbage out. Hence, the lack of trust.

Luckily, a Causal MMM can help you get around this issue. We’ll explain.

Our MMM data is flawed — so now what?

Phil says that there are two main approaches to getting around flawed data fed into MMMs. You can either:

- Add more assumptions, or

- Get better data

Let’s break down the pros and cons of these two options. (Hint: Haus prefers one of these options over the other.)

Method 1: Add more assumptions to your model

In this scenario, an MMM vendor might add assumptions about how these variables interact with each other. Assuming, for example, that Channel A can only impact revenue by driving effectiveness of Channel B restricts the options for the model to explain the data.

A certain amount of assumptions are always required to make working statistical models. For example, standard MMMs assume things like diminishing returns to spend. But the more assumptions you add, the more human judgment impacts the results. Identification becomes less about the data and more about the model.

Method 2: Ground your model in better data

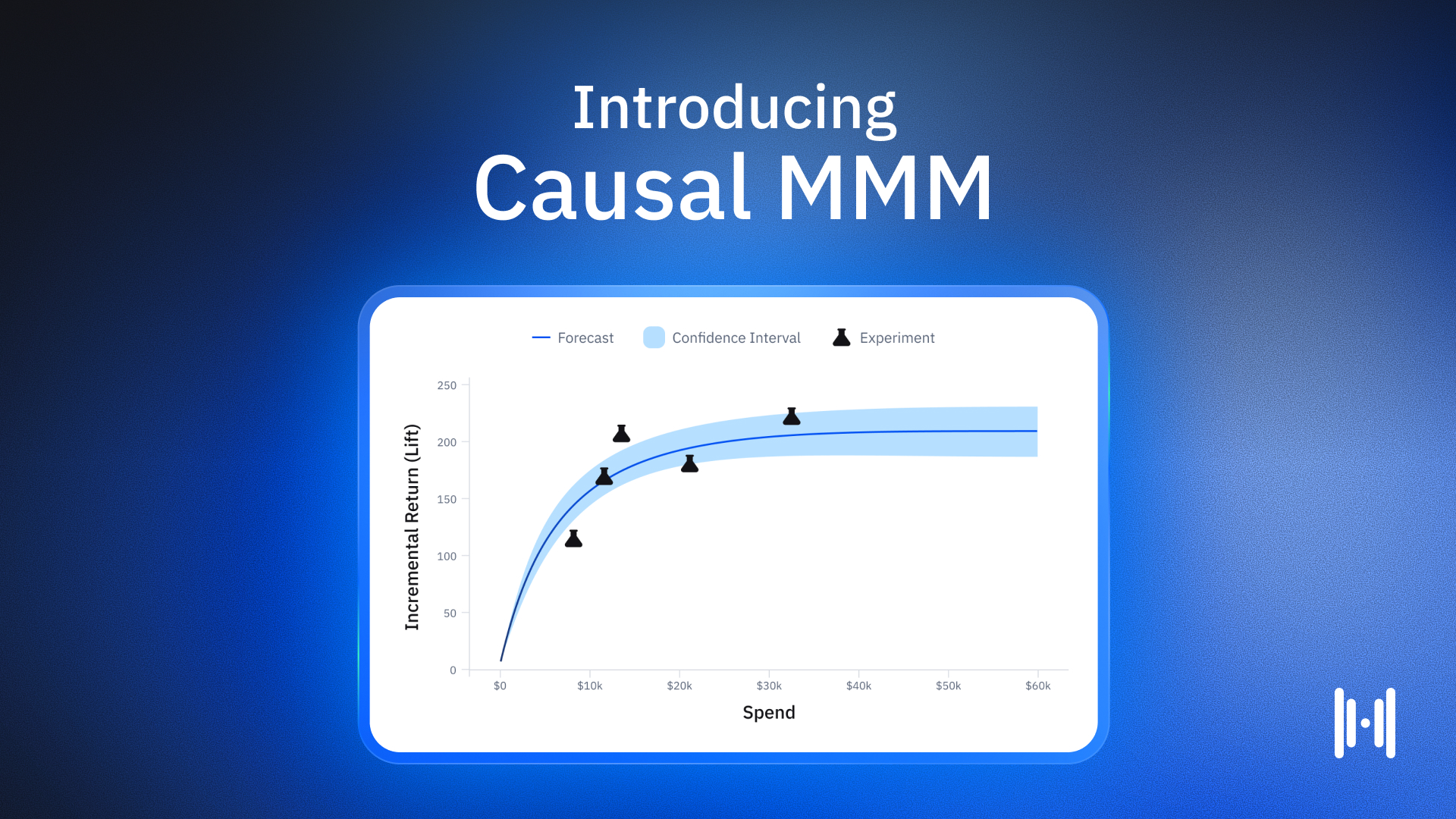

Haus leans heavily into the “Get better data option.” And when it comes to measuring incremental impact of marketing spend, the best data you can get is Haus GeoLift experimental data, full stop. (We never said we were humble.) It’s the gold standard for a reason — it actually unpacks the causal impact of your marketing. That’s why we ground our Causal MMM in experiments.

Using experiments as priors to improve MMMs is not new — and it’s the right direction. But the Haus MMM approach takes it a step further. Rather than using experiments as suggestions, we use proprietary algorithms to treat them as ground truth in our models. We start with experiments, then let observational data fill in the gaps.

The more experiments you have to draw from, the more powerfully informed your MMM will be. That’s why a strong experimental roadmap will always be key as you get started with Causal MMM.

What if my team hasn’t run many experiments?

It’s the inevitable follow-up question and it’s a fair one. We can talk about experimental data until we’re blue in the face…but you might be at a loss if you haven’t had the chance to run many experiments yet.

“We’re still waiting on experimental data” isn’t exactly a winning response when the CFO slides into your Slack DMs to check in on quarterly goals.

That’s where priors come into the picture. Allow us to explain.

The pros and cons of priors in MMMs

Priors are essentially just prior beliefs that help inform your model assumptions. These can be based on historical data sets from your business. For instance, maybe a marketing team knows from past campaigns that paid search spend usually has fast, diminishing returns. The first dollars spent are very effective, but after a point, extra spend has a much lower incremental effect.

In the MMM, instead of letting the model infer any arbitrary shape of the paid search response, the team might place a prior that biases the curve toward more concavity.

The problem? This data point isn’t based on experiments. (After all, you haven’t run many yet.) You might be basing this prior on platform data, which is confounded because platforms are grading their own homework, which means their reporting will often inflate impact.

Another problem with priors is that they’re out of marketers’ control. Consider that every Bayesian MMM uses priors — so if your vendor isn’t asking you about them, they’re making up assumptions on your behalf. These “hidden priors” could be misguided and affect your MMM results in ways you don’t have any visibility into.

For these reasons, priors are towards the bottom in our tiers of data quality. But they still can serve a useful purpose sometimes: They offer a boundary for your results.

Priors as bounds for your model

If you’re not working with much experimental data initially, priors can be a useful way to bound results. It gives us an idea. Given these prior beliefs, it makes sense that the estimate will fall somewhere between these bounds.

But it’s important to remember that priors are weighted as guardrails. Often expressed as a range, these priors keep model results within reasonable ranges. And not all priors are built the same. The model should be more responsive to the priors you’re more confident in, and less responsive to the priors you’re less certain about.

You do need some priors to tune the model's parameters because priors — when combined effectively with experiments — can help bound the model's outputs and make it more trustworthy.

What about the channels I can’t test?

Being early in your experimental roadmap isn’t the only reason you might lack experimental data — you also might be unable to test certain channels. For instance, testing sponsored content from creators isn’t always straightforward. Your unique business conditions can also make it infeasible to test certain channels.

When it comes to MMMs, these gaps in your data might be concerning. Are you expected to just punt on certain channels in your MMM?

Nope. There’s a fairly simple workaround here: Test the channels you can test. Continuously testing those testable channels can improve the model's estimates for untestable channels.

After all, untestable channels are estimated relative to everything else. If the model has poor certainty on testable channels, the uncertainty propagates, and attribution to untestable ones becomes noisy. But once testable channels are grounded in experiments, Causal MMM can better identify how the remaining channels explain the “leftover” variation in the data.

It’s like solving a puzzle: the more pieces you lock into place (via experiments), the fewer ways the remaining ambiguous pieces (untestable channels) can fit.

Always look for transparency

If your MMM is really only as good as the data that informs it, this presents an important task for marketing teams: You must be vigilant about transparency. You need to know what data are going into your MMM, where your priors are coming from, and what role they’re playing in determining your marketing returns.

If you’ve ever written off MMMs as black boxes built on flawed data, you aren’t alone. Luckily, opting for an MMM grounded in experimental data can clear up a lot of those transparency concerns. You’ll have visibility into your data because it’s drawn from the very experiments you’ve been running. You’ll be putting trustworthy data in and getting trustworthy recommendations out.

After that, the next step is pretty straightforward: Make informed, confident decisions that push your business forward.

.png)

.png)

.png)

.png)

.png)

.avif)

.png)

.png)

.png)

.png)

.png)

.avif)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.png)

.avif)

.png)

.avif)