Raise your hand if you’ve ever lost sleep over a marketing campaign. (We can’t see you right now, but we’re guessing your hand is up.)

Now raise your other hand if it’s specifically measurement that keeps you up at night. (Okay, you can put your hands down. People are staring.)

We get it — marketing measurement isn’t easy. Today’s customer journey is a tangled web of cross-channel touchpoints. Plus, a steady drumbeat of new privacy regulations has made it harder than ever to actually understand this journey. That’s why more and more marketers are ditching outdated attribution models and choosing incrementality to measure their marketing.

Incrementality testing takes an experiment-driven approach, which helps you understand the true, causal impact of your campaign. Did seeing a marketing campaign cause a customer to make a purchase? If the answer is yes, that’s an incremental conversion.

This comprehensive guide is designed to help you get started. We’ll lay out the shortcomings of traditional models, the benefits of incrementality, and some tactical steps for building an incrementality testing plan. No, we can’t promise we’ll cure your marketing-fueled insomnia — but we’ll get you that much closer to building a modern, science-backed measurement practice.

Today’s marketing measurement hurdles

In 2025, marketing measurement is a bit like one of those American Ninja Warrior obstacle courses. You’re trying to understand your customers, but they’re too busy jumping from platform to platform. And just when you think you’re nearing the finish line, a new privacy initiative pops up and blocks your path to accurate measurement.

It’s an exhausting process — and many of today’s popular tools aren’t ready to meet the moment. So before we dive into the benefits of incrementality testing, let’s first look at why so many of today’s tools are frustratingly outdated.

Traditional measurement limitations

First, let’s rewind. The year is 1992. A man (probably wearing a flannel shirt and listening to Nirvana on his Discman) sees an ad for a candy bar on the side of a city bus. He wasn’t originally planning on buying a candy bar, but the ad convinced him. So he goes into a store and he buys that candy bar. Boom: incremental conversion.

Fast-forward to today and the path to purchase isn’t so simple. Now a person might see an ad for that candy bar before a YouTube video — not even realizing that they had gotten an email about that same candy bar earlier that day. Which campaign convinced them to purchase?

For brands that are advertising on multiple channels or platforms, incrementality can offer answers. Otherwise, you might have to rely on platforms that mistake correlation for causation, which often leads to inflated, inaccurate results — and inefficient ad spend.

Privacy updates change everything

It’s not just that the customer journey is more complex. Growing support for consumer privacy protections has also complicated things. Laws like the General Data Protection Regulation (GDPR) in the EU and the California Consumer Privacy Act (CCPA) are affecting how companies collect, store, and use consumer data.

And no, this desire for enhanced privacy isn’t just going to go away. The proverbial horse is out of the barn. Public sentiment and legislative momentum mean the laws will keep coming. Marketers have no choice but to accept this reality and evolve their tactics.

Luckily, platforms like Haus are privacy-durable. That’s because incrementality tests don’t rely on pixels, PII, or other vulnerable information. Instead of asking “How is our campaign affecting this user’s behavior?” incrementality tests geo-segment conversion data so that consumer behavior and business outcomes are analyzed through regional test and control experiments.

Existing tools aren't cutting it

Marketers have no shortage of measurement tools at their disposal. The problem is that these tools lack accuracy, don’t account for new privacy regulations, and don’t show causation — meaning they don’t tell us if an outcome happened because of an ad.

So let’s walk through these options one by one and explain how they come up short.

Platform reporting

If you’re relying on the platforms themselves for conversion data, you’ll need to take it all with a big grain of salt. After all, these platforms are grading their own homework — and will happily take credit for a conversion that would have happened anyway, without an ad. Because of this over-reporting of ROI, many marketing teams end up spending way too much on these channels. Meanwhile, incrementality testing will tell you how many conversions they actually drive so you can optimize your spend.

Multi-touch attribution (MTA)

MTA was billed as a cure-all for traditional attribution’s flaws. But MTA relies way too much on cookies and pixel tracking, which are a lot harder to depend on given today’s privacy regulations. Plus, MTA tends to underrate the upper funnel and bases its metrics on correlation, which still doesn’t explain the true impact of your marketing. Oh, and it doesn’t do well accounting for seasonality, which can drastically affect your conversion rates.

Traditional media mix models (MMMs)

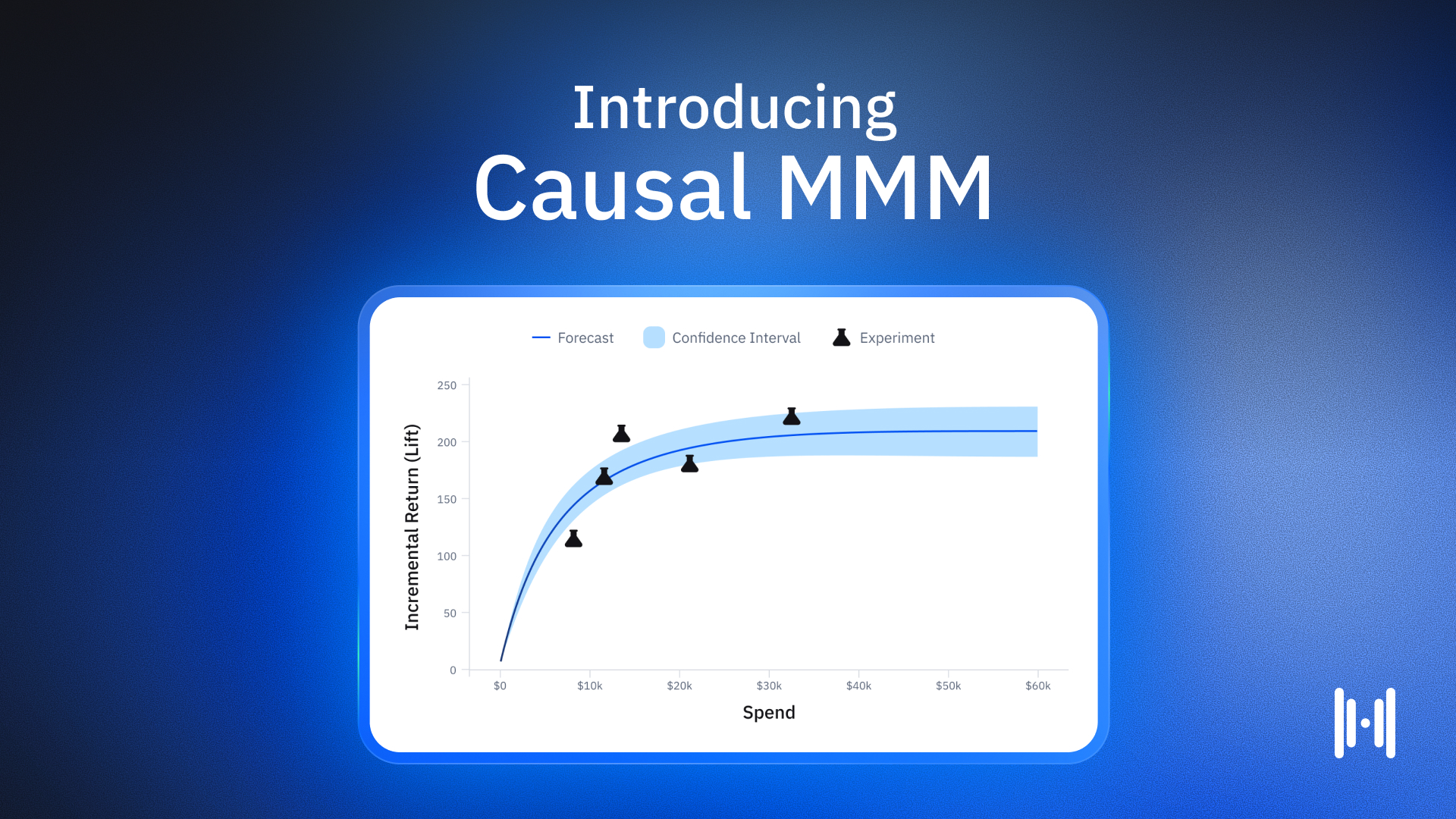

Traditional MMMs rely on aggregate daily KPI data, which doesn’t give the model enough data to make precise conclusions. (That’s why traditional MMMs rarely give a confidence interval with their results — it would just be too wide of an interval.) They’re also another model driven by correlation, which doesn’t offer an accurate read into marketing performance. The cherry on top: traditional MMMs tend to be “resource-intensive,” which is a fancy way of saying “expensive.” For all these reasons, we're building a causal MMM.

Okay, now that we’ve completed our roast of traditional measurement solutions, let’s explain how incrementality can help you overcome these marketing hurdles.

The power of incrementality testing

Brands have gravitated toward incrementality testing because it’s powered by causality, it’s privacy-durable, it’s based on current sales data, and it’s fast. With the right mix of incrementality tests, brands are able to allocate budget efficiently and maximize growth.

But before we go any further, let’s make sure we know exactly what incrementality is and how it tends to work.

So, what is incrementality?

Haus’ Principal Economist Phil Erickson offers an elegant definition: Incrementality measures how a change in strategy causes a change in business outcomes.

For instance, if we invest more in a certain channel, how does that affect conversions? What about if we prioritize more upper-funnel creative? Or include branded search terms? When it comes to testing, the options are pretty much endless.

So we get what incrementality does. But what does it look like in practice? Here we like to use the analogy to randomized controlled trials for drug development. In such a trial, one group gets the drug (i.e. the treatment group) and one group gets a placebo (i.e. the control group). Then you measure both groups’ reactions to understand the efficacy of the drug. If both groups have similar outcomes, the drug likely isn’t very effective.

An incrementality test is the same sort of thing (minus the drugs). Instead, the treatment group sees a marketing campaign, while the control group doesn’t. Then you compare KPIs. Did the treatment group convert significantly more than the control group? If so, the ad was incremental to some degree. If there was minimal or no lift, that might be a campaign worth rethinking.
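To make that comparison concrete, here is a minimal Python sketch of the arithmetic behind lift. The function name and the numbers are hypothetical, chosen only for illustration, and the sketch leaves out the part a real test can't skip: checking whether the difference is statistically significant.

```python
def relative_lift(treatment_conversions, treatment_population,
                  control_conversions, control_population):
    """Relative lift of the treatment conversion rate over the control rate."""
    treatment_rate = treatment_conversions / treatment_population
    control_rate = control_conversions / control_population
    return (treatment_rate - control_rate) / control_rate


# Hypothetical numbers: two equally sized groups of 100,000 people.
lift = relative_lift(
    treatment_conversions=1150, treatment_population=100_000,
    control_conversions=1000, control_population=100_000,
)
print(f"Relative lift: {lift:.1%}")  # prints "Relative lift: 15.0%"
```

In this made-up case, the treatment group converted 15% more often than the control group. Whether that counts as "significantly more" depends on how noisy the data is, which is where experimental design comes in.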

Incrementality: The hard way

You’re sold on incrementality and have decided to start your own in-house incrementality program. We’ll warn you: It won’t be easy. To ensure you reap incrementality’s rewards, you’ll need to nail the following steps.

1. Assemble an expert team

Your org is likely packed with growth marketing experts. But — all due respect — it’s less likely your team is full of experts in causal inference. And that’s okay. But in order to run powerful incrementality tests, you’ll need advanced data scientists to design an experiment that controls for variables, reduces bias, and selects randomized samples.

In short, you need a team with a wealth of experience running rigorous experiments. This will require recruiting and hiring talented PhD-level data scientists and economists.

2. Home in on your goals

Now that you’ve spent seven figures assembling the Avengers of causal inference expertise, it’s time to put their brain power to use. That starts with setting a clear experimental goal. Just saying “I want to know if my marketing is working” won’t cut it. You can test lots of different things. You may go a bit more general and test if a new channel is incremental. Or you may go a bit more granular and test to see if you should include branded terms in your PMAX campaigns. (We have…lots of thoughts on that.)

You’ll probably want to hire some strategists who can help you prioritize experiments and build a testing roadmap. The more clear and focused your goals are, the more likely you are to surface useful insights. (Spoiler alert: If you cut corners here, you’ll have a much harder time uncovering valuable takeaways.)

3. Structure the exact experiment you need

Once you’ve figured out the first experiment you want to run, it’s time to pick test groups. These will vary based on the question you’re trying to answer.

For instance, say you want to figure out if TikTok is incremental. You’ll want two groups: one that gets the TikTok campaign and one that doesn’t. In Haus-speak, this is known as a 2-cell geo-holdout. “2-cell” just means you have two groups, and “geo-holdout” means one region doesn’t receive the marketing intervention (i.e. the control group).

Say instead you’re trying to figure out the optimal spend level on TikTok. Then you need three groups: two treatment groups and a control. (This is called, you guessed it, a 3-cell test.) A common 3-cell test involves one group that receives your business-as-usual (BAU) TikTok ad spend, another that receives double this BAU ad spend, and a third group that receives no TikTok ads.

Bottom line: You’ll need an incrementality platform that lets you easily toggle between 2-cell tests and 3-cell tests, with or without holdouts.
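As a rough illustration of what "cells" look like in practice, the Python sketch below shuffles a list of regions and deals them into named cells. Everything here is an assumption for demonstration purposes: the region names, the cell names, and the `assign_cells` helper are hypothetical, and plain random assignment is a stand-in, not a description of how Haus or any other platform builds its test groups.

```python
import random


def assign_cells(regions, cell_names, seed=0):
    """Shuffle regions, then deal them round-robin into the named cells."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(regions)
    rng.shuffle(shuffled)
    cells = {name: [] for name in cell_names}
    for index, region in enumerate(shuffled):
        cells[cell_names[index % len(cell_names)]].append(region)
    return cells


# Hypothetical regions; a real test would use actual geographic markets.
regions = [f"Region {i}" for i in range(1, 10)]

# 2-cell geo-holdout: one group sees the campaign, one doesn't.
two_cell = assign_cells(regions, ["treatment", "holdout"])

# 3-cell test: BAU spend, double spend, and a holdout.
three_cell = assign_cells(regions, ["bau_spend", "double_spend", "holdout"])

for cell, members in three_cell.items():
    print(cell, members)
```

Switching between a 2-cell and a 3-cell design is just a matter of changing the list of cell names. The hard part, which this sketch ignores, is making sure the resulting groups are actually comparable.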

4. Don’t forget to control for… everything

If a drug company is testing new allergy meds, they would never choose a control group that consists of people with a long history of allergies, and a treatment group full of people who have never experienced allergies. That would introduce a big, fat, confounding variable.

The same goes for incrementality testing. You want control groups and treatment groups that are very similar. Use frontier econometric methods to create characteristically indistinguishable groups. And be sure to take the extra step of cleaning up your data to soften noise, account for seasonality, and weed out outliers, which ensures your metrics are accurate and actionable.

5. Flexibly calibrate testing power

There are tradeoffs when it comes to experimental design. For instance, a four-week holdout experiment is more “powerful” than a two-week holdout experiment. That just means that the four-week experiment has a narrower confidence interval. We can be more confident in the results because we’ve collected more data in four weeks (compared to two).

That said, you might not always have the bandwidth to run a longer test. You might need results in two weeks, not four. And you might not feel comfortable turning off advertising in a region for four weeks.

So you’ll need to design a program that allows you to flexibly change your experimentation period and holdout size on an experiment-by-experiment basis — helping you find that sweet spot between quick results and reliable data.
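The duration tradeoff can be sketched with a little arithmetic. The Python snippet below assumes, as a simplification, that each day of the test contributes an independent observation with the same standard deviation. Real sales data rarely behaves that neatly, and the `daily_std` value is made up, so treat this as intuition rather than a power calculation.

```python
import math


def ci_half_width(daily_std, days, z=1.96):
    """Approximate 95% confidence interval half-width for the mean of
    `days` independent daily observations with standard deviation `daily_std`."""
    return z * daily_std / math.sqrt(days)


# Hypothetical daily standard deviation of the KPI being measured.
two_weeks = ci_half_width(daily_std=50.0, days=14)
four_weeks = ci_half_width(daily_std=50.0, days=28)

print(f"2-week half-width: {two_weeks:.1f}")
print(f"4-week half-width: {four_weeks:.1f}")
print(f"Ratio: {two_weeks / four_weeks:.2f}")  # sqrt(2), about 1.41
```

Under these assumptions, doubling the test length narrows the interval by a factor of about 1.41, not 2. That diminishing payoff is one reason a shorter test can sometimes be a reasonable compromise.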

6. Get your results — and use them

You run the experiment. A few weeks pass, then you get your results and email them to your team. Then? You all high-five and head out to happy hour to celebrate your first ever incrementality test!

Not so fast. It’s time to analyze those results and act on them. (Then you can go to happy hour. We promise.) Ultimately, your next move will depend on the questions you were trying to answer and KPIs you were tracking. Below are some possible moves to make after analyzing results.

Recalibrate platform metrics

After you complete an incrementality test, you’ll get a nifty metric called the incrementality factor (IF). Your IF signifies the proportion of total conversions that were incremental. So an IF of 0.6 means that if you had 1000 total conversions, 600 of those were incremental.

Applying this IF to your platform-attributed metrics is a great way to get a more realistic view of your marketing’s impact. After all, platform-reported metrics tend to overstate impact.
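Here is a minimal Python sketch of that recalibration, using the 0.6 IF and 1,000 conversions from the example above. The $30,000 spend figure and the function names are hypothetical, added only to show how the adjustment flows through to cost per incremental acquisition (CPIA).

```python
def incremental_conversions(platform_conversions, incrementality_factor):
    """Scale platform-attributed conversions by the incrementality factor."""
    return platform_conversions * incrementality_factor


def cost_per_incremental_acquisition(spend, platform_conversions,
                                     incrementality_factor):
    """Spend divided by the conversions the campaign actually caused."""
    return spend / incremental_conversions(platform_conversions,
                                           incrementality_factor)


platform_conversions = 1000   # what the platform reports
incrementality_factor = 0.6   # from the incrementality test
spend = 30_000                # hypothetical spend in dollars

incremental = incremental_conversions(platform_conversions, incrementality_factor)
platform_cpa = spend / platform_conversions
cpia = cost_per_incremental_acquisition(spend, platform_conversions,
                                        incrementality_factor)

print(f"Incremental conversions: {incremental:.0f}")  # 600
print(f"Platform-reported CPA: ${platform_cpa:.2f}")  # $30.00
print(f"CPIA: ${cpia:.2f}")                           # $50.00
```

In this illustration, the platform's $30 CPA becomes a $50 CPIA once you count only the conversions the ads caused.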

Refine channel budgets

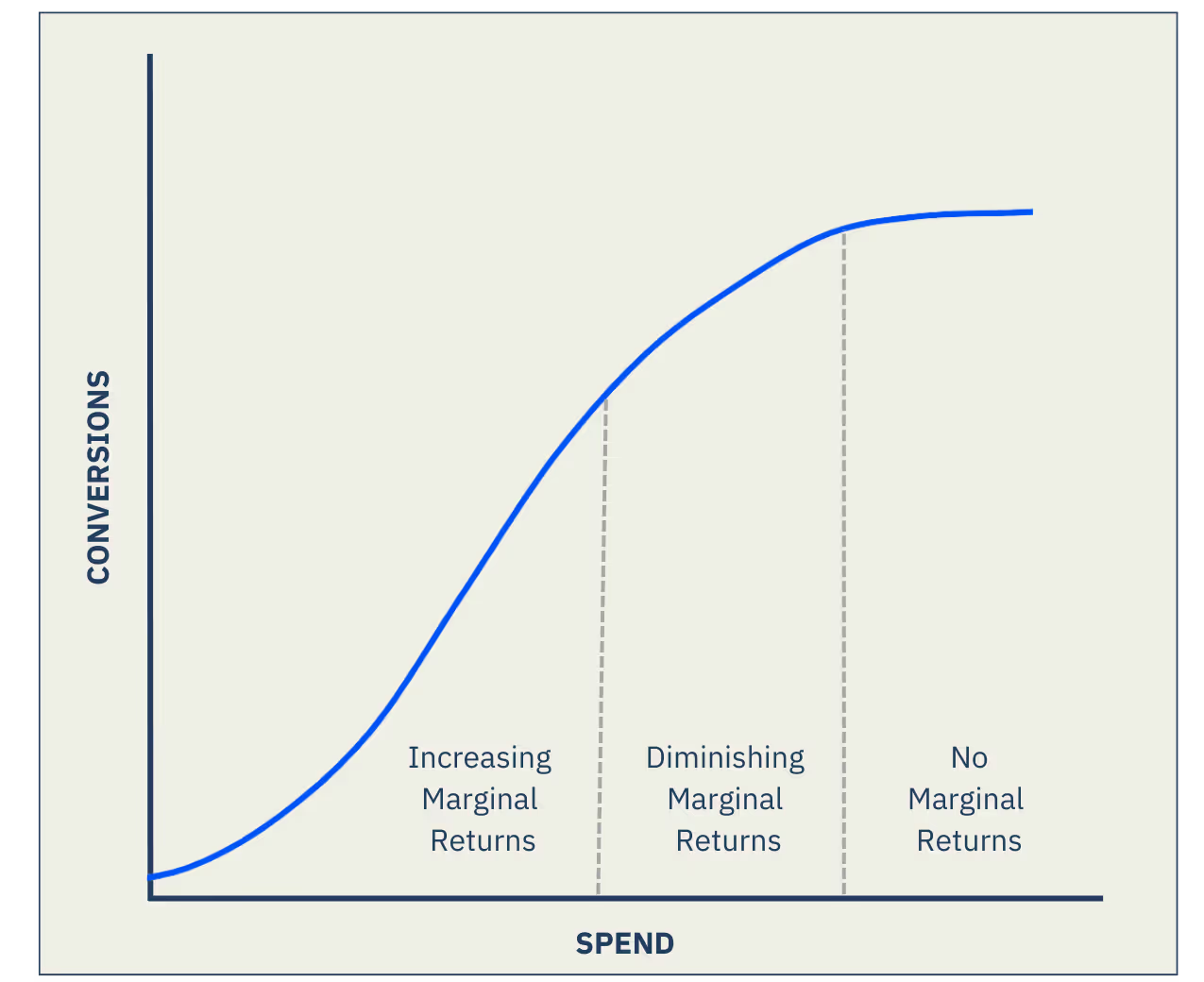

Are you over-spending on a channel? Under-spending? A powerful incrementality platform can give you the insights needed to correctly allocate your ad budget. For instance, you might learn that more spend doesn’t exactly equal more conversions. In which case, you can scale back the channel and lower your CPA, without lowering conversions.

Maybe your spend falls on the flat part of that line. Spending more doesn’t mean you’ll get more conversions, which means it’s time to scale back and save.
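One way to spot the flat part of the curve is to look at what each additional incremental conversion costs as spend goes up. The Python sketch below uses made-up spend levels and conversion counts, and the `marginal_cost_per_conversion` helper is hypothetical. In practice, the inputs would come from a multi-cell test that compares several spend levels.

```python
def marginal_cost_per_conversion(spend_levels, incremental_conversions):
    """Cost of each extra incremental conversion between consecutive spend levels.

    Returns infinity for a step where conversions did not increase.
    """
    points = list(zip(spend_levels, incremental_conversions))
    costs = []
    for (low_spend, low_conv), (high_spend, high_conv) in zip(points, points[1:]):
        extra_conversions = high_conv - low_conv
        if extra_conversions > 0:
            costs.append((high_spend - low_spend) / extra_conversions)
        else:
            costs.append(float("inf"))
    return costs


# Hypothetical results at three spend levels.
spend_levels = [10_000, 20_000, 40_000]
conversions = [400, 600, 620]

print(marginal_cost_per_conversion(spend_levels, conversions))
# [50.0, 1000.0]
```

In this invented example, moving from $10,000 to $20,000 buys extra conversions at $50 each, while moving from $20,000 to $40,000 buys them at $1,000 each. That second step is the flat part of the line.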

7. Plan informed future tests based on what you learn

Say you’ve learned you can scale back your spend on a certain channel. This produces an obvious next question: Where should we reallocate that ad spend? Maybe this money could go toward a brand new channel. You’ve always been YouTube-curious — so now’s your chance to run a 2-cell holdout test to see if YouTube’s incremental.

In short, insights tend to build on one another as you test more and more. Often you’ll find that answers you get from one incrementality test will inspire the central question of your next test. Before you know it, you’ll have developed a culture of experimentation.

Incrementality: The easy way

As you can see, incrementality is as easy as 1-2-3…4…5-6-7. Whew. Okay. Maybe it’s not that easy. Especially when step #1 involves assembling a dream team of causal inference experts. And that’s before you even get into building your own experimentation software then designing intentional, effective, non-noisy experiments.

Thankfully, there’s an easier, one-step solution.

Step 1: Choose a partner that already has those experts, already has an automated self-serve incrementality platform, and has already helped brands big and small save millions in ad spend.

That’s it. No more steps. With Haus, you can get up and running fast, then enjoy the following benefits that come with precise, expert-backed incrementality testing.

More accurate budget planning

No more basing decisions on correlative data, which can overstate or understate impact. Instead, you’ll be working off of your marketing’s true impact, then you can budget accordingly.

When it comes to testing, you can learn much more than just “Does this campaign work?” Maybe you want to find out if increasing spend by 10% on a certain channel moves the needle. Don’t be afraid to get granular, then zoom out and fill in the big picture. (Haus comes packed with experimental templates so that you can get started faster.)

A full view of omnichannel impact

If you only measure DTC, you might be missing out on some incremental conversions and underestimating impact. That’s why Haus ingests .com data and Amazon data so that you can understand your marketing’s true impact across all sales channels (including physical retail locations).

Preparation for the next big privacy initiative

Now every news article about privacy controls won’t fill you with dread. Instead, you’ll have left individual user-based tracking in the past and designed experiments based on group behavior — which anonymizes the single user. For more on how Haus helps teams handle the ongoing privacy initiatives, check out the tail end of this Open Haus episode.

An extra layer of precision

“Attributing changes in data (ex: ‘my KPI went up or down’) to certain factors (ex: ‘we upped spend in an ad channel’) is incredibly hard to do well, and extremely easy to screw up,” explains Haus’ Economist Simeon Minard. “The world is huge and chaotic, and there are a million things that could be messing up your measurement.”

That’s why Haus uses synthetic control methodology to take your precision from good to great. In fact, Haus’ results are 4x more precise than platforms that use matched market test design. Then, some extra data-cleaning and outlier management steps add yet another layer of precision.

Help when you need it

From laying out a testing roadmap during onboarding to interpreting your results, Haus’ team of customer success pros will make sure you’re getting the most out of your experiment — and preparing you for the next one. Have we mentioned they’re former growth marketers, agency pros, and platform experts? In other words: They know the ropes.

Haus passes the test for customers

As marketing science enthusiasts, it’s no surprise we like evidence. So instead of just telling you about Haus’ benefits, let’s show you how customers have used the platform to improve their measurement, surface valuable insights, and optimize their ad spend.

Ritual rethinks TikTok

Health and wellness brand Ritual wanted to know how incremental TikTok was for their business. To get some answers, they ran a 2-cell test in the Haus app and learned that TikTok drove no lift to their business. Instead of turning off the channel, they made some key changes to their TikTok ads then retested. These adjustments boosted lift from 0% to 8% — all at a highly efficient CPIA.

Jones Road Beauty finds YouTube’s true impact

Cosmetics brand Jones Road Beauty knew YouTube was a major driver of sales, but given the limited attribution capabilities of YouTube viewing on TVs, they wondered if the channel’s value was being understated. They ran a 3-cell test in the Haus platform, testing three different levels of YouTube ad spend. It turned out that doubling their YouTube ad spend led to 2.26X more new customer orders, a clear sign they could up their investment in the channel.

FanDuel finds a winning spend level

YouTube was a big factor for online sportsbook FanDuel — so they wanted to make sure they were getting their spend level right on the platform. To find that sweet spot, they set up a 3-cell no holdout test where a third of their markets had “low” spend levels, another third saw “medium” spend levels, and the final third saw “high” spend levels. They found that there was no difference in lift between the “medium” spend and “high” spend tiers. This meant they could stick to their medium spend knowing they wouldn’t be missing out on conversions.

Newton Baby uncovers key halo effects

Infant sleep brand Newton Baby sees a large share of sales on Amazon. To better understand TikTok’s incremental impact on Amazon and .com sales, they ran a 2-cell geo experiment for 4 weeks. They realized that only looking at direct sales from TikTok understated the channel’s impact. When accounting for Amazon sales as well, they realized they could increase their spend.

For more examples of Haus’ impact across industries, check out our library of success stories.

Getting started with incrementality testing

For years, major brands like Netflix and Amazon have had science-backed internal tools that measure incremental impact. But Haus believes these tools should be democratized so that any brand can get started with incrementality.

And no, Haus doesn’t just hand over the results and say “you do you.” Instead, we help you dive deep into your results and develop a complete view of your impact across all sales channels. This expert-backed approach is how we’ve helped marketing and finance teams reallocate millions of dollars towards what is working so they can make smarter decisions.

So as the terrain shifts under marketers’ feet, you can stick with outdated tools — or you can shift away from attribution and toward a culture of experimentation.
