“How effective is my marketing?”

It’s a question marketers have been asking themselves since…well, the invention of marketing. And it’s the question that traditional media mix models (MMM) and incrementality tests aim to answer — but their methods for finding those answers are quite different.

In this guide, we’ll explore the differences between traditional MMM and incrementality testing, then outline some of the advantages and disadvantages of each method.

Understanding MMM

At its core, traditional MMM uses regression analysis to illustrate correlations between consumer activity and channel spending over time. To run this analysis, the model takes into account:

- Marketing spend across various channels such as TV, radio, print, and digital

- Baseline sales that would happen without marketing

- External factors including seasonality, competitor actions, and economic indicators

- Advertising carryover effects that show how long marketing impact persists

The output is a set of coefficients that represent the effectiveness of each marketing channel, allowing marketers to calculate metrics like return on investment (ROI) and make informed budget allocation decisions.

For example, traditional MMM might show that for every $1 spent on Facebook advertising, a company generates $3 in revenue. This correlation-based approach indicates that Facebook appears to be a highly effective channel, but it doesn't necessarily prove that Facebook advertising directly caused those sales.
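To make the mechanics concrete, here is a minimal sketch of the kind of regression described above, reduced to a single channel. Everything in it is an assumption for illustration: the weekly spend figures, the geometric carryover with a decay of 0.5, and the synthetic sales series are all made up, and a production MMM would handle many channels and external factors at once.

```python
def geometric_adstock(spend, decay):
    """Carry a fraction `decay` of each period's ad effect into the next period."""
    carried = 0.0
    adstocked = []
    for s in spend:
        carried = s + decay * carried
        adstocked.append(carried)
    return adstocked


def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x (one regressor)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical weekly spend for one channel (not real data).
spend = [100.0, 0.0, 0.0, 200.0, 0.0, 50.0]
adstocked = geometric_adstock(spend, decay=0.5)

# Synthetic sales: a baseline of 1000 plus 2 per unit of adstocked spend.
sales = [1000.0 + 2.0 * a for a in adstocked]

# The fit recovers the baseline and the channel coefficient.
baseline, coef = fit_line(adstocked, sales)

# Revenue the model credits to the channel, per dollar spent.
# This is a correlation-based figure, not proof of causation.
modeled_revenue_per_dollar = coef * sum(adstocked) / sum(spend)
```

Because the sales series here is generated from the adstocked spend, the fit recovers the coefficient exactly. With real data, the same coefficient would only describe how spend and sales moved together.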

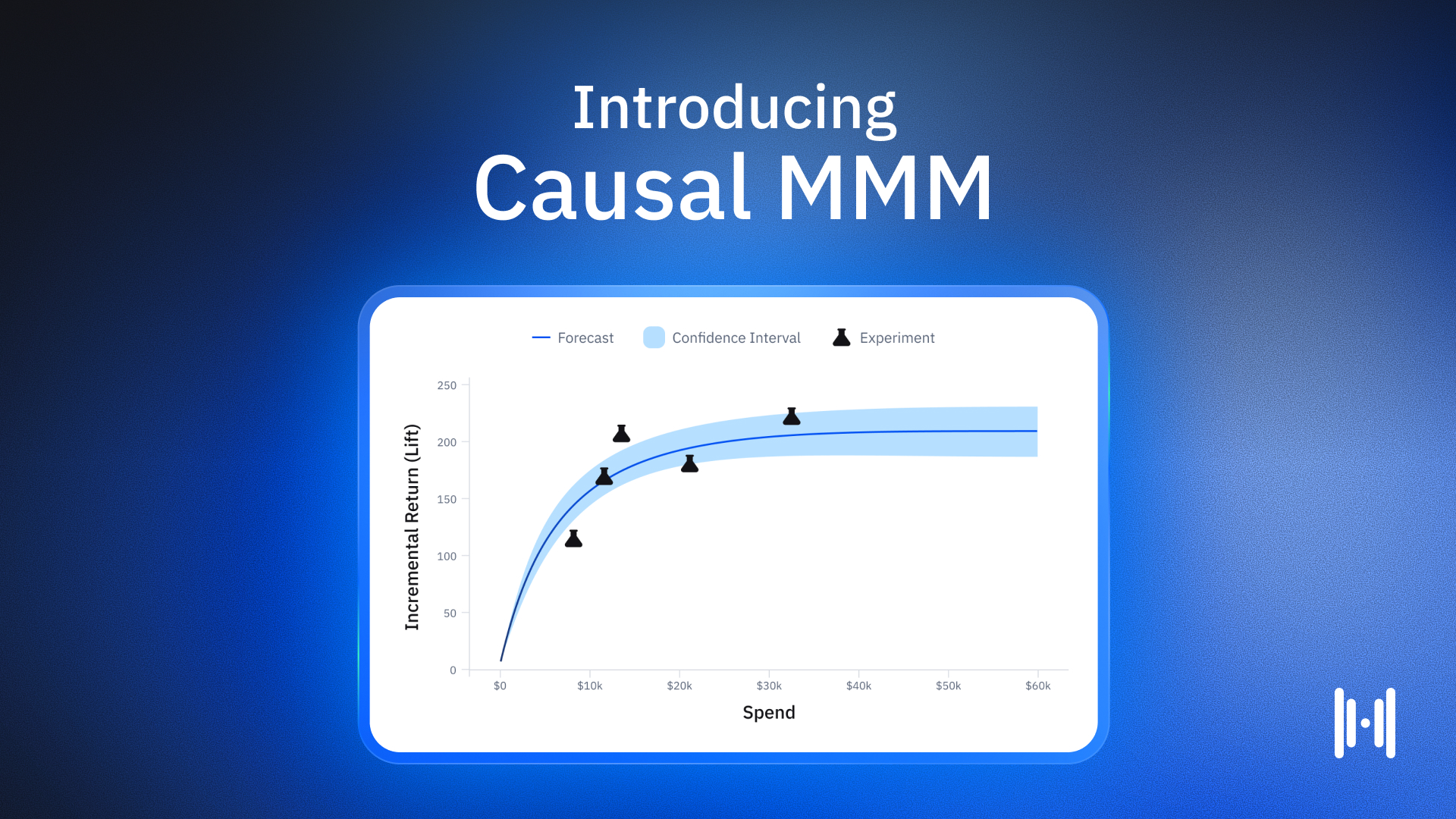

To measure the causal relationship between a marketing channel and sales, you’d need a model inherently tuned by high-velocity experiments — a Causal MMM. While a traditional MMM is rooted in historical correlational data, a Causal MMM is rooted in causal reality.

For more on the stark difference between traditional MMM and causal MMM, read over the five questions we think every marketing team should ask their potential MMM provider.

Why have marketers relied on MMM for so long?

Traditional MMM provides a holistic view of marketing effectiveness, offering a broad understanding of how all marketing channels interact and contribute to business outcomes. It captures both immediate and long-term effects of marketing activities, though its reliance on outdated data can lead to misguided recommendations. For this reason, marketers in our recent industry survey named MMM one of their least trusted measurement solutions.

Marketers have also turned to MMM because it accounts for non-marketing variables that influence performance, such as economic conditions, seasonality, and competitive activities, providing context for marketing performance.

The not-so-great parts of traditional MMM

MMM requires substantial historical data — typically 2-3 years’ worth — to produce reliable results. For this reason, newer brands or brands piloting new products might have a difficult time driving value from a traditional media mix model.

Traditional MMMs also often struggle to provide granular insights, such as campaign, creative, or audience effectiveness. Plus, traditional MMMs are typically updated quarterly or annually, limiting real-time decision-making capabilities.

Perhaps most significantly, the correlation-based approach inherent to traditional MMMs may not accurately represent the true causal relationships between marketing activities and outcomes.

This limitation creates a practical problem: MMM might attribute strong performance to Facebook ads when in reality, the platform is simply showing ads to users who were already likely to convert. Without establishing causality, MMM could lead to overinvestment in channels that reach high-value customers but don't necessarily influence their behavior.
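A small worked example shows how targeting alone can make a channel look effective. The segments, sizes, and conversion rates below are hypothetical, and the sketch deliberately assumes the ads have zero true effect.

```python
# Hypothetical audience segments (illustrative numbers only).
segments = [
    {"name": "high_intent", "users": 1_000, "base_rate": 0.10, "exposed_share": 0.9},
    {"name": "low_intent", "users": 9_000, "base_rate": 0.01, "exposed_share": 0.1},
]

# Assumption: the ads change nobody's behavior.
TRUE_AD_LIFT = 0.0


def conversion_rate(exposed):
    """Blended conversion rate among users who did or did not see the ads."""
    users = 0.0
    conversions = 0.0
    for seg in segments:
        share = seg["exposed_share"] if exposed else 1 - seg["exposed_share"]
        n = seg["users"] * share
        rate = seg["base_rate"] + (TRUE_AD_LIFT if exposed else 0.0)
        users += n
        conversions += n * rate
    return conversions / users


exposed_rate = conversion_rate(exposed=True)
unexposed_rate = conversion_rate(exposed=False)
```

Under these assumptions, exposed users convert at roughly five times the rate of unexposed users even though the ads caused no conversions at all. The gap comes entirely from who was shown the ads.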

Incrementality testing solves a lot of these problems

Incrementality testing utilizes experimental design principles to measure the true incremental impact of marketing activities.

A target audience is divided into test and control groups; the test group is exposed to the marketing activity being measured while the control group is not exposed to the activity. The difference in performance between the two groups represents the incremental impact. This approach moves beyond correlation to establish causality by isolating the specific effect of marketing interventions. It answers the question, “If we didn’t run this campaign, what conversions would we have lost out on?”
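The test-versus-control comparison above boils down to simple arithmetic. Here is a minimal sketch with hypothetical group sizes and conversion counts. The function name is ours, and a real test would also need a significance check before anyone acts on the result.

```python
def incremental_impact(test_users, test_conversions, control_users, control_conversions):
    """Compare conversion rates between the exposed test group and the holdout."""
    test_rate = test_conversions / test_users
    control_rate = control_conversions / control_users
    incremental_rate = test_rate - control_rate
    # Conversions in the test group that would not have happened without the ads.
    incremental_conversions = incremental_rate * test_users
    # Lift relative to what the control group did anyway.
    relative_lift = incremental_rate / control_rate
    return incremental_conversions, relative_lift


# Hypothetical experiment results (not real data).
incremental_conversions, relative_lift = incremental_impact(
    test_users=100_000,
    test_conversions=3_000,
    control_users=100_000,
    control_conversions=2_500,
)
```

In this made-up example, about 500 of the test group's 3,000 conversions are incremental, a lift of roughly 20% over the control group.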

“With Haus, we have a counterfactual to understand what would have happened in the absence of a given marketing intervention,” explains Haus’ Chief Strategy Officer Olivia Kory. “What was that group going to do anyway? That’s fundamentally what we mean when we talk about incrementality testing.”

A revealing example from practice: A company might discover through incrementality testing that their Facebook ads, which appeared highly effective according to platform-reported metrics, actually only generate $0.50 in incremental revenue for every $1 spent — far below what the platform originally reported.

This disparity occurs because Facebook's algorithm is excellent at finding users who would have purchased anyway. It’s not until you’ve run an incrementality test that you can figure out how many of these conversions would have happened even without the advertising exposure.

For this reason, you can’t rely solely on platform-reported metrics to tell this story — after all, these platforms are “grading their own homework” and will happily take credit for conversions that would have happened anyway.
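To put numbers on the gap between platform-reported and incremental return, here is a short sketch. The spend and the platform-reported revenue are hypothetical figures chosen for illustration. The incremental revenue is set so that it matches the $0.50 per $1 example above.

```python
def return_per_dollar(revenue, spend):
    """Revenue generated per dollar of ad spend."""
    return revenue / spend


# Hypothetical campaign figures (not real data).
spend = 10_000.0
platform_reported_revenue = 30_000.0  # what the ad platform takes credit for
incremental_revenue = 5_000.0         # what an incrementality test attributes to the ads

platform_roas = return_per_dollar(platform_reported_revenue, spend)
incremental_roas = return_per_dollar(incremental_revenue, spend)

# Share of platform-credited revenue that the ads actually caused.
incremental_share = incremental_revenue / platform_reported_revenue
```

Here the platform reports $3.00 per dollar while the test shows $0.50, which means about five-sixths of the credited revenue would have arrived without the ads.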

Incrementality testing is tricky to implement yourself

Despite its advantages, incrementality testing comes with implementation challenges, requiring careful experimental design and technical infrastructure. Building an in-house culture of experimentation likely requires a team of highly trained economists, data scientists, and causal inference experts.

For this reason, many teams look for an incrementality partner who can work alongside them to design and run tests. Ideally, this partner doesn’t just toss you on the platform and let you sink or swim. Instead, they work alongside you to run creative experiments, gather insights, then put them toward improved business outcomes.

Integrating MMM and incrementality

More and more teams are using incrementality test results to validate MMM findings and refine model parameters. A balanced decision framework might leverage MMM for strategic, long-term planning while using incrementality for tactical, channel-specific optimizations.

But of course you’ll still run into the main downside of traditional MMM: The relationships illustrated between marketing channels and sales outcomes are correlational, not causal. That’s why brands are looking for an MMM based on causal relationships. In the case of Causal MMM, that means building an MMM that treats experimental results as ground truth.
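One simple way to picture validating MMM findings against experiments is to check whether each channel's modeled return falls inside the range an incrementality test supports. The sketch below is an illustration under our own assumptions, not a description of how any particular Causal MMM works. The channel names, estimates, and intervals are all hypothetical.

```python
def check_against_experiment(mmm_estimate, experiment_low, experiment_high):
    """Label an MMM return estimate relative to an experiment's interval."""
    if mmm_estimate > experiment_high:
        return "overstated"
    if mmm_estimate < experiment_low:
        return "understated"
    return "consistent"


# Hypothetical inputs: (MMM return per dollar, experiment low, experiment high).
channels = {
    "facebook": (3.0, 0.3, 0.7),
    "tv": (1.2, 0.9, 1.6),
}

verdicts = {
    name: check_against_experiment(estimate, low, high)
    for name, (estimate, low, high) in channels.items()
}
```

A channel flagged as overstated or understated is a candidate for refining the model's parameters, or for running another test.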
