What are the benefits of marketing experiments?

Marketing experimentation helps answer the fundamental business question: Does this actually work? Instead of relying on gut feel, internal dashboards, or channel reports (which often grade their own homework), experiments let you establish cause-and-effect between spending choices and real business results.

A proper experiment isolates what would've happened anyway (the "counterfactual") from what actually changed thanks to a specific campaign. Done well, this gives you clearer guidance on where to spend budget, which channels to grow, and which tactics need a rethink.

What are some mistakes to avoid when running experiments?

Running a good experiment is far from straightforward — it requires a commitment to rigorous science. Cutting corners can lead to imprecise results, misguided takeaways, and flawed decisions. Here are some mistakes our Measurement Strategy team sometimes sees brands make:

- Rushing into experiments: Brands sometimes don’t take the time up front to think through experimental design and clearly define what success looks like. Unfortunately, an experiment that tells you the wrong thing, or gives wildly imprecise results, is worse than no experiment at all. You become more confident, but possibly in the wrong direction.

- Shifting goalposts after seeing results: Teams often decide how they'll analyze results after seeing early numbers, which introduces subconscious (or overt) "p-hacking" and bias. Analysis should be pre-committed, not adapted on the fly to chase statistical significance or business-friendly results. Otherwise, you might run the test longer or slice the data differently until you find what you hoped for — making your conclusions unreliable.

- Misaligned KPIs and business objectives: Brands choose KPIs that look impressive but don't reflect real business priorities — like focusing on cost per click (CPC) or percentage lift, instead of actual incremental sales or customer lifetime value (CLV). Surface-level metrics mislead. If your experiment shows a jump in clicks but no meaningful gain in incremental conversions, budget gets misallocated.

- Overreliance on statistical significance: Marketers often ask, "Is this result statistically significant?" as if clearing a predefined threshold (commonly p < 0.05) is the only thing that matters. A p-value only tells you how likely you would be to see a result at least as extreme as yours if there were no true effect. It does not say whether the effect is important, reliable, or worth pursuing for your business. In marketing, decisions often have to be made with incomplete information and some risk.
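The "run the test longer until it looks good" habit is easy to underestimate. The following is a minimal Python sketch under simplifying assumptions: simulated A/A tests with no true effect, unit-variance outcomes, equal arms, and a z-test at alpha = 0.05. The function name and parameters are hypothetical. It compares a single pre-committed analysis with checking after every batch of users:

```python
import random
from statistics import NormalDist

# Two-sided critical value for alpha = 0.05 (about 1.96).
Z_CRIT = NormalDist().inv_cdf(1 - 0.05 / 2)


def false_positive_rates(n_sims=2000, n_looks=10, batch_size=100, seed=42):
    """Simulate A/A tests (no true effect) and compare two analysis habits.

    Returns (fixed_rate, peeking_rate):
      fixed_rate   - share of tests called significant at the single, pre-committed final look
      peeking_rate - share called significant at ANY of the n_looks interim checks
    Outcomes are assumed to be unit-variance in both arms.
    """
    rng = random.Random(seed)
    fixed_hits = 0
    peeking_hits = 0
    for _ in range(n_sims):
        running_diff = 0.0  # running sum of (test - control) outcomes
        ever_significant = False
        z = 0.0
        for look in range(1, n_looks + 1):
            # A new batch of users arrives in each arm. With no true effect, the batch's
            # summed test-minus-control difference is Normal(0, variance = 2 * batch_size).
            running_diff += rng.gauss(0.0, (2 * batch_size) ** 0.5)
            n_per_arm = look * batch_size
            # z for a difference in means: (diff / n) / sqrt(2 / n) == diff / sqrt(2 * n)
            z = running_diff / (2 * n_per_arm) ** 0.5
            if abs(z) > Z_CRIT:
                ever_significant = True  # a peeker would stop here and declare a win
        if abs(z) > Z_CRIT:  # the pre-committed analyst only checks the final look
            fixed_hits += 1
        if ever_significant:
            peeking_hits += 1
    return fixed_hits / n_sims, peeking_hits / n_sims


if __name__ == "__main__":
    fixed, peeking = false_positive_rates()
    print(f"false positives, fixed horizon: {fixed:.1%}")
    print(f"false positives, peeking every batch: {peeking:.1%}")
```

Because the peeker gets ten chances to cross the 1.96 line instead of one, the simulated false-positive rate lands well above the nominal 5% (the textbook figure for ten equally spaced looks is close to 20%). That is the practical cost of deciding how to analyze results after seeing the numbers.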

What are some best practices to keep in mind when running incrementality experiments?

Of course, you want to avoid mistakes when running experiments, which is why we advise marketing teams to build these best practices into their experimentation programs:

- Write down your exact analysis plan, KPIs, and success/failure criteria before running the experiment: Use automated pipelines when possible, keeping humans out of the critical calculation loop. This doesn't mean you lack flexibility, but decisions about the analysis should be made before you know the outcome.

- Tie every experiment to a meaningful business outcome: This could be incremental sales, subscriptions, profit, or similar metrics. When possible, measure cost per incremental acquisition or incremental return on ad spend (iROAS), not just relative or vanity metrics.

- Instead of chasing strict statistical significance, focus on expected value and confidence intervals: Ask questions like "What's the range of likely outcomes? How confident do we feel in the direction and magnitude of the effect? What's the risk if we're wrong?" Sometimes a result with only 65% confidence is still worth betting on, if the upside is strong and the risk is limited.

- Before experiments run, agree on a decision framework: For instance, if incrementality and attribution diverge, which takes precedence? Test incrementality on your highest-spend channels and use those results to calibrate or replace attribution-based assumptions. As confidence grows, gradually shift toward fully causality-driven measurement.
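The metrics and questions in this list translate into a few lines of arithmetic. This minimal Python sketch uses hypothetical function names, a normal approximation around the estimate, and an assumed break-even iROAS of 1.0 (incremental revenue equals spend, ignoring margins):

```python
from statistics import NormalDist

BREAK_EVEN_IROAS = 1.0  # assumption: $1 of incremental revenue per $1 spent; ignores margins


def iroas(incremental_revenue: float, spend: float) -> float:
    """Incremental return on ad spend: revenue caused by the ads per dollar spent."""
    return incremental_revenue / spend


def cost_per_incremental_acquisition(spend: float, incremental_conversions: float) -> float:
    """Dollars spent per conversion that would not have happened without the ads."""
    return spend / incremental_conversions


def summarize_bet(iroas_estimate: float, iroas_se: float, next_spend: float,
                  ci_level: float = 0.90) -> dict:
    """Describe a test result as a range of outcomes rather than a pass/fail p-value.

    Uses a normal approximation around the estimate and reads it as a rough
    belief about the true iROAS (roughly, a flat-prior assumption).
    """
    z = NormalDist().inv_cdf(0.5 + ci_level / 2)
    low = iroas_estimate - z * iroas_se
    high = iroas_estimate + z * iroas_se
    belief = NormalDist(mu=iroas_estimate, sigma=iroas_se)
    return {
        "ci": (low, high),
        # Chance the true iROAS clears break-even under this approximation.
        "prob_profitable": 1 - belief.cdf(BREAK_EVEN_IROAS),
        # Expected incremental revenue minus spend on the next budget increment,
        # assuming returns stay constant at the estimated iROAS.
        "expected_net": (iroas_estimate - BREAK_EVEN_IROAS) * next_spend,
        # Same quantity at the pessimistic end of the interval.
        "downside_net": (low - BREAK_EVEN_IROAS) * next_spend,
    }


if __name__ == "__main__":
    # Hypothetical test: $50,000 spend, $65,000 incremental revenue, 1,000 incremental conversions.
    estimate = iroas(65_000, 50_000)
    print(f"iROAS: {estimate:.2f}")
    print(f"cost per incremental acquisition: ${cost_per_incremental_acquisition(50_000, 1_000):.2f}")
    readout = summarize_bet(estimate, iroas_se=0.6, next_spend=100_000)  # standard error is hypothetical
    low, high = readout["ci"]
    print(f"90% interval for iROAS: {low:.2f} to {high:.2f}")
    print(f"chance of clearing break-even: {readout['prob_profitable']:.0%}")
    print(f"expected net on next $100k: ${readout['expected_net']:,.0f}")
    print(f"downside net on next $100k: ${readout['downside_net']:,.0f}")
```

With these made-up inputs, the 90% interval comfortably includes values below break-even, yet there is about a 69% chance the channel is profitable and an expected gain of about $30,000 on the next $100,000, against a pessimistic case of losing roughly $69,000. Whether that bet is acceptable depends on your risk tolerance, which is the kind of question the decision framework should settle in advance.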

What are some challenges to be aware of in today's marketing measurement environment?

Privacy and data limitations

Recent privacy regulations (GDPR, CCPA) and platform changes such as iOS 14+ sharply limit user-level data and tracking, making many traditional measurement tools, especially multi-touch attribution (MTA), increasingly unreliable or even obsolete. Modern experimentation must now be "privacy durable": often conducted at the aggregate (geo or cohort) level, without depending on invasive tracking.

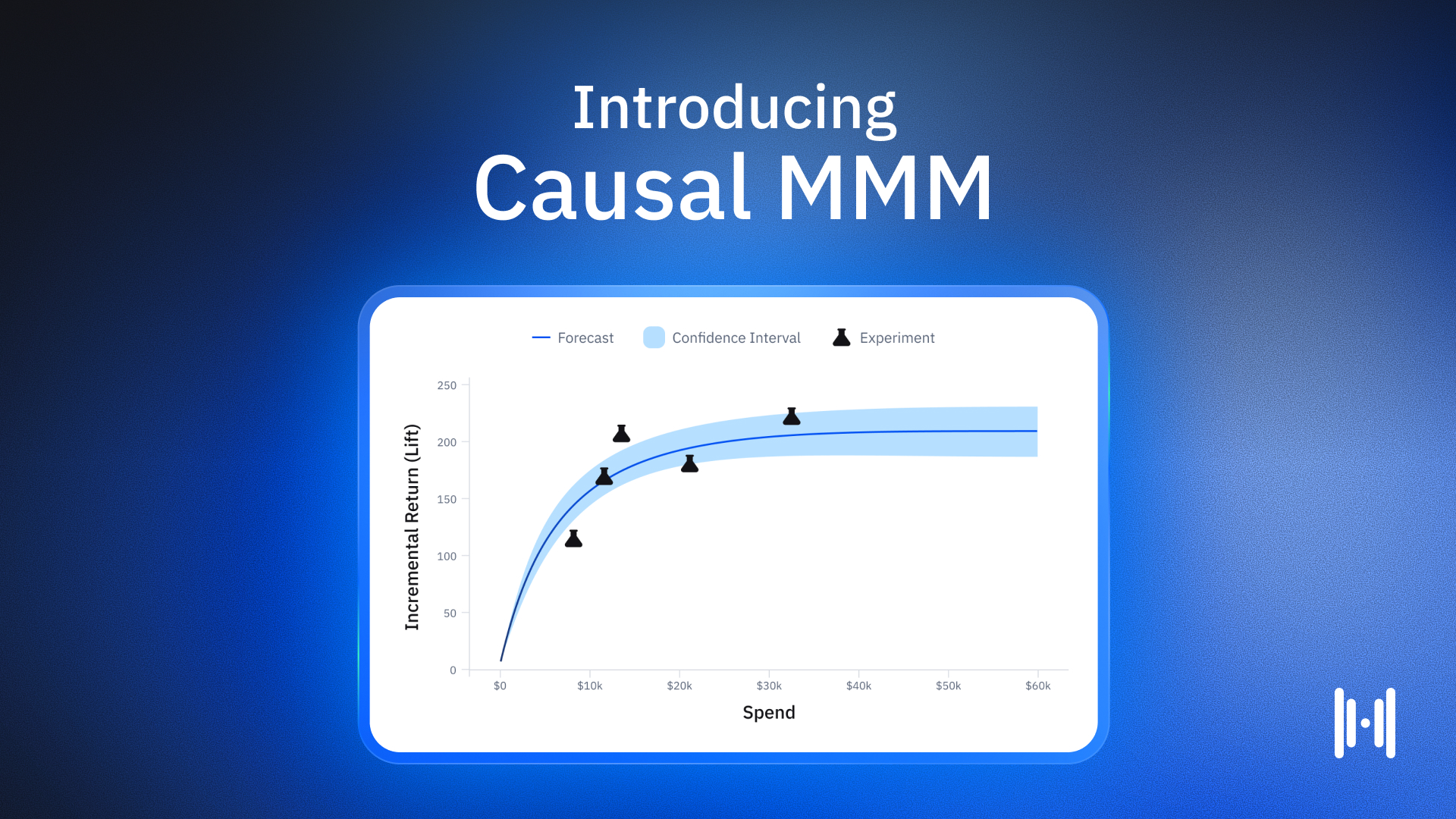

Choosing the right marketing mix model (MMM)

Traditional MMMs rely heavily on historical correlation, which can break down as consumer behavior, competitive landscapes, or media spend patterns shift. The best modern MMMs are grounded in experimental (causal) data. Experiments aren't just used to reinforce an MMM’s recommendations — they're built into the model, updating as business conditions evolve. When evaluating MMM software, look for:

- Core integration with incrementality experiments

- Consistency between model and experiment recommendations

- Actionable, customizable outputs for your actual business context

- Fast, efficient onboarding with transparent methodology

The hidden cost of false precision

Vendors that promise ultra-high "precision" without transparency often downplay randomness and noise. Overconfidence based on flawed models can cause wasted spend when teams act quickly, believing they have a sure thing. Accept and plan for uncertainty; demand transparency around model assumptions, standard errors, and test power.
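Test power is easy to leave out of a vendor conversation. A quick power calculation shows the smallest lift a given test can reliably detect. This minimal Python sketch uses the standard normal-approximation formula for comparing two conversion rates, with hypothetical function names and inputs:

```python
from math import ceil, sqrt
from statistics import NormalDist


def _z_total(alpha: float, power: float) -> float:
    """Sum of the two-sided critical z and the z for the desired power."""
    std_normal = NormalDist()
    return std_normal.inv_cdf(1 - alpha / 2) + std_normal.inv_cdf(power)


def minimum_detectable_lift(baseline_rate: float, n_per_arm: int,
                            alpha: float = 0.05, power: float = 0.80) -> float:
    """Smallest relative lift a two-arm conversion test detects with the given power.

    Normal approximation, equal arms, both arms' variance approximated by the baseline.
    """
    se_diff = sqrt(2 * baseline_rate * (1 - baseline_rate) / n_per_arm)
    return _z_total(alpha, power) * se_diff / baseline_rate


def users_per_arm(baseline_rate: float, relative_lift: float,
                  alpha: float = 0.05, power: float = 0.80) -> int:
    """Users needed in each arm to detect a given relative lift (same assumptions)."""
    delta = baseline_rate * relative_lift
    return ceil(2 * baseline_rate * (1 - baseline_rate) * (_z_total(alpha, power) / delta) ** 2)


if __name__ == "__main__":
    # Hypothetical inputs: 2% baseline conversion rate, 50,000 users per arm.
    print(f"minimum detectable lift: {minimum_detectable_lift(0.02, 50_000):.1%}")
    print(f"users per arm to detect a 5% lift: {users_per_arm(0.02, 0.05):,}")
```

With a 2% baseline conversion rate and 50,000 users per arm, this works out to a minimum detectable lift of roughly 12%; reliably detecting a 5% lift would take roughly 308,000 users per arm. A vendor quoting precise results from a much smaller test deserves questions.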

Building a culture of honest, scientific experimentation

A healthy experimentation culture isn't about running "more" tests for the sake of it, or getting positive results every time. It means being transparent about what you know, what you don't know, and what level of risk is tolerable for your business. Uncomfortable or inconclusive results aren't failures — they're essential learning opportunities.

For an example of incrementality in action, check out Haus’ case study with Ritual. This well-known wellness brand explored TikTok as a new channel. Its first incrementality test (using proper controls) showed zero lift, contrary to expectations. Rather than abandoning TikTok or manipulating the analysis, the brand changed its creative and targeting, then retested. A subsequent experiment yielded an 8% lift. True incrementality testing helped the brand improve its strategy, not just "report success."

Incrementality: The bottom line

Marketing experiments, run properly, are among the highest-leverage activities available to modern brands. They cut through platform bias, outdated models, and privacy challenges to deliver clear, actionable insights about what's really driving your business forward. But this only happens if experiments are rooted in causality, executed with care, interpreted honestly, and aligned with your true business goals.

Proceeding with intention and avoiding the frequent mistakes outlined here will lead to a stronger, more credible, and more profitable marketing measurement program. In a landscape where every dollar must count, don't guess. Design, test, and measure with discipline and transparency. Better decisions — and better business outcomes — depend on it.

TL;DR: Running Experiments

What are marketing experiments?

Marketing experiments establish cause-and-effect relationships between marketing actions and business outcomes. Instead of relying on gut feeling or platform-reported metrics, they use controlled comparisons to determine whether marketing activities actually work. The core purpose is to isolate what would have happened without a specific campaign, providing clearer guidance on budget allocation and strategy decisions.

What is a culture of experimentation?

A culture of experimentation involves systematically testing marketing activities by creating controlled environments where one group receives a marketing intervention (test group) while another doesn't (control group). Proper design is key: pre-committing to analysis plans, simulating experiments beforehand with historical or placebo data, ensuring proper group matching, and tying measurements to meaningful business outcomes rather than vanity metrics.
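Simulating an experiment on historical data before launch shows how much "lift" pure noise can produce. This minimal Python sketch assumes a simple random split of geos and a hypothetical dict of pre-period sales per geo. The function names and synthetic data are illustrative, and this is a plain random-split placebo, not a matched-market design:

```python
import random
from statistics import mean


def placebo_lifts(pre_period_sales, n_test, n_sims=1000, seed=7):
    """Repeatedly split geos at random on pre-period data, where no campaign ran.

    Each draw assigns n_test geos to a fake test group and the rest to control,
    then records test_mean / control_mean - 1. Every nonzero value is noise.
    """
    rng = random.Random(seed)
    geos = list(pre_period_sales)
    lifts = []
    for _ in range(n_sims):
        rng.shuffle(geos)
        test, control = geos[:n_test], geos[n_test:]
        test_mean = mean(pre_period_sales[g] for g in test)
        control_mean = mean(pre_period_sales[g] for g in control)
        lifts.append(test_mean / control_mean - 1)
    return lifts


def noise_floor(lifts, coverage=0.95):
    """Lift size (in either direction) that random splits exceed only (1 - coverage) of the time."""
    ordered = sorted(abs(x) for x in lifts)
    return ordered[min(len(ordered) - 1, int(coverage * len(ordered)))]


if __name__ == "__main__":
    # Synthetic stand-in for real pre-period weekly sales across 50 geos.
    data_rng = random.Random(0)
    sales = {f"geo_{i}": data_rng.lognormvariate(10, 0.5) for i in range(50)}
    lifts = placebo_lifts(sales, n_test=25)
    print(f"random splits alone produce lifts beyond ±{noise_floor(lifts):.1%} about 5% of the time")
```

If that noise floor is larger than the lift you expect the campaign to produce, the design needs more geos, a longer test, or better-matched groups before any money is spent. The same loop can wrap whatever analysis you pre-committed to.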

What are the four types of experiments?

While there are various ways to categorize marketing experiments, four common types include:

- A/B Tests - Comparing two versions of a marketing asset (like different ad creatives or landing pages) to see which performs better.

- Geo Experiments - Testing marketing interventions in specific geographic regions while keeping others as controls to measure regional impact.

- Holdout Tests - Deliberately withholding marketing from a segment of the audience to determine the true incremental value of that marketing activity.

- Incrementality Tests - Specifically designed to measure the causal lift or additional business generated by a marketing activity that wouldn't have occurred otherwise.
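For holdout and incrementality tests, the core arithmetic is a comparison against the group that didn't see the marketing, which stands in for the counterfactual. The sketch below is hypothetical (the function name and example numbers are illustrative) and uses a simple normal-approximation confidence interval for a difference in conversion rates:

```python
from math import sqrt
from statistics import NormalDist


def holdout_lift(exposed_users: int, exposed_conversions: int,
                 holdout_users: int, holdout_conversions: int,
                 ci_level: float = 0.95) -> dict:
    """Estimate lift and incremental conversions from a holdout test (normal approximation)."""
    p_exposed = exposed_conversions / exposed_users
    p_holdout = holdout_conversions / holdout_users  # stands in for the counterfactual rate
    diff = p_exposed - p_holdout
    # Standard error of a difference between two independent proportions.
    se = sqrt(p_exposed * (1 - p_exposed) / exposed_users
              + p_holdout * (1 - p_holdout) / holdout_users)
    z = NormalDist().inv_cdf(0.5 + ci_level / 2)
    return {
        "relative_lift": diff / p_holdout,
        # Conversions among exposed users that would not have happened otherwise.
        "incremental_conversions": diff * exposed_users,
        "abs_lift_ci": (diff - z * se, diff + z * se),
    }


if __name__ == "__main__":
    # Hypothetical: 100,000 exposed users (2,300 conversions), 10,000 held out (200 conversions).
    result = holdout_lift(100_000, 2_300, 10_000, 200)
    low, high = result["abs_lift_ci"]
    print(f"relative lift: {result['relative_lift']:.0%}")
    print(f"incremental conversions: {result['incremental_conversions']:.0f}")
    print(f"95% CI on absolute lift: {low:.4f} to {high:.4f}")
```

With these illustrative numbers, the point estimate is a 15% lift (about 300 incremental conversions), but the 95% interval on the absolute lift runs from roughly 0.01 to 0.59 percentage points, so the relative lift could plausibly be anywhere from near zero to almost 30%. A small holdout keeps more people exposed but widens that range.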
