A few weeks ago, Ron Kohavi shared a post highlighting a core issue with A/B and conversion-lift testing on platforms like Meta and Google. The claim: Many of these so-called “experiments” aren’t truly randomized, and the results they produce can be confounded by platform delivery algorithms.

The underlying issue is what researchers now call divergent delivery — and for marketers, it’s something you probably already understand intuitively.

Let’s break down what it means specifically for growth marketers who rely on platform testing tools, why it matters, and how marketers should evolve their approach.

What is divergent delivery?

When you run a test inside a platform like Meta Ads Manager, here’s what you might think is happening:

- You create two creatives, Ad A and Ad B.

- The platform randomly assigns users to see one or the other.

- You measure results and declare a winner.

But here’s what actually happens:

- The platform labels users as eligible for Ad A or Ad B.

- Then it unleashes two separate optimization engines… one for each ad.

- Each engine tries to maximize performance for its creative by selectively delivering it to users who are most likely to engage.

So when you compare outcomes, you’re not seeing how the same audience responded to each creative. You’re seeing how two different audiences — selected by the platform’s black-box algorithms — responded to different creative-targeting combos.
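To make that concrete, here is a minimal sketch of how divergent delivery alone can produce a "winner." Every number in it is hypothetical: the two audience segments, their conversion rates, and the delivery mixes are assumptions chosen for illustration, not platform data. Both creatives convert identically within each segment, so the true creative effect is zero.

```python
# Hypothetical per-segment conversion rates. Both creatives convert
# identically within a segment, so the true creative effect is zero.
SEGMENT_CVR = {"high_intent": 0.10, "low_intent": 0.02}


def blended_cvr(delivery_mix):
    """Expected conversion rate given each segment's share of impressions."""
    return sum(share * SEGMENT_CVR[segment] for segment, share in delivery_mix.items())


# Randomized delivery: both ads would reach the same audience mix.
random_mix = {"high_intent": 0.5, "low_intent": 0.5}

# Divergent delivery: each ad's optimizer settles on a different mix
# (assumed shares, for illustration only).
mix_a = {"high_intent": 0.8, "low_intent": 0.2}
mix_b = {"high_intent": 0.4, "low_intent": 0.6}

cvr_random = blended_cvr(random_mix)
cvr_a = blended_cvr(mix_a)
cvr_b = blended_cvr(mix_b)

print(f"Randomized, either ad: {cvr_random:.3f}")
print(f"Divergent, Ad A:       {cvr_a:.3f}")
print(f"Divergent, Ad B:       {cvr_b:.3f}")
```

Under these assumed numbers, Ad A reports an 8.4% conversion rate and Ad B reports 5.2%, even though a randomized comparison would show 6.0% for both. The entire gap comes from who was served, not from the creative.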

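And that’s where you lose Ron and the academic community: once the two groups being compared are no longer equivalent, the comparison is no longer a randomized one.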

Why divergent delivery matters

At a high level, this means you’re not testing “creative performance.” You’re testing creative + targeting algorithm behavior.

That makes a big difference. Especially when:

- One creative appeals to a niche audience that’s harder to reach

- The optimizer learns at different speeds for each creative

- You’re measuring metrics like CTR or CVR that are influenced by who saw the ad

And here’s the kicker: A small change in audience composition or week-to-week algorithm learning can flip the outcome. The platform might tell you Ad B won — but that could just be because it found an easier audience to serve.

As Ron and others have pointed out, that’s not a causal test. It’s a biased observational comparison dressed up as an experiment.

“The creative is the targeting.”

This phrase has started to circulate among smart marketers, and it captures the reality well. In these platforms, your creative defines who the algorithm chooses to show your ad to.

That’s not inherently bad. In fact, it’s great! We want to show the ad to the people it’s most likely to convert. But it changes what we can reasonably learn from a platform A/B test.

So… should I stop testing in-platform?

No, but you need to interpret those tests for what they are:

✅ Reliable for:

- Getting directional signal on how different creatives interact with the algorithm

- Understanding performance within the platform’s ecosystem

- Iterating quickly on variations to engage different audience pockets

🚫 Not reliable for:

- Measuring causal lift

- Understanding business-level impact (revenue, signups, etc.)

- Making decisions about which strategy to scale long-term

But what about platform holdout studies?

You might be wondering: Does divergent delivery apply to holdout studies, too?

In most cases, no. Holdout studies work differently from A/B tests, measuring the overall impact of showing any ads versus none. Since you're assessing account- or campaign-level performance, and not comparing specific ad creatives, there’s no divergence in delivery. The targeting is part of what’s being measured, not a source of bias.
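The arithmetic behind a holdout comparison is simple enough to sketch. The function below is a hypothetical helper, not a platform API, and the input counts are made up for illustration. It assumes users were randomly split into a group eligible for ads and a holdout group that saw none.

```python
def holdout_lift(treated_users, treated_conversions, holdout_users, holdout_conversions):
    """Compare conversion rates between an ads-on group and a no-ads holdout."""
    if treated_users <= 0 or holdout_users <= 0:
        raise ValueError("Both groups need at least one user.")

    treated_rate = treated_conversions / treated_users
    holdout_rate = holdout_conversions / holdout_users

    # Absolute lift: extra conversions per user attributable to the ads.
    absolute_lift = treated_rate - holdout_rate

    # Relative lift is undefined when the holdout had no conversions.
    relative_lift = absolute_lift / holdout_rate if holdout_rate > 0 else None

    return {
        "absolute_lift": absolute_lift,
        "relative_lift": relative_lift,
        "incremental_conversions": absolute_lift * treated_users,
    }


# Made-up counts for illustration only.
result = holdout_lift(
    treated_users=100_000,
    treated_conversions=2_400,
    holdout_users=100_000,
    holdout_conversions=2_000,
)
print(result)
```

With these illustrative inputs, the ads-on group converts at 2.4% against 2.0% in the holdout, which works out to roughly 400 incremental conversions. A real readout would also report a confidence interval, which this sketch leaves out.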

As a result, holdout tests generally do measure causal lift. But they’re not perfect.

Even in holdout studies, you’re still relying on platform-defined conversion signals, which may not reflect your full business impact. For example, if you’re an omnichannel brand, that “lift” might only reflect conversions on .com — not Amazon or retail. So while you’ve eliminated one source of bias (divergent delivery), you still need to be thoughtful about what’s being measured, and what’s being left out.

This is where GeoLift complements platform holdouts: by measuring aggregate business outcomes — not just what the platform tracks.

At Haus, we built GeoLift to solve this problem, among others

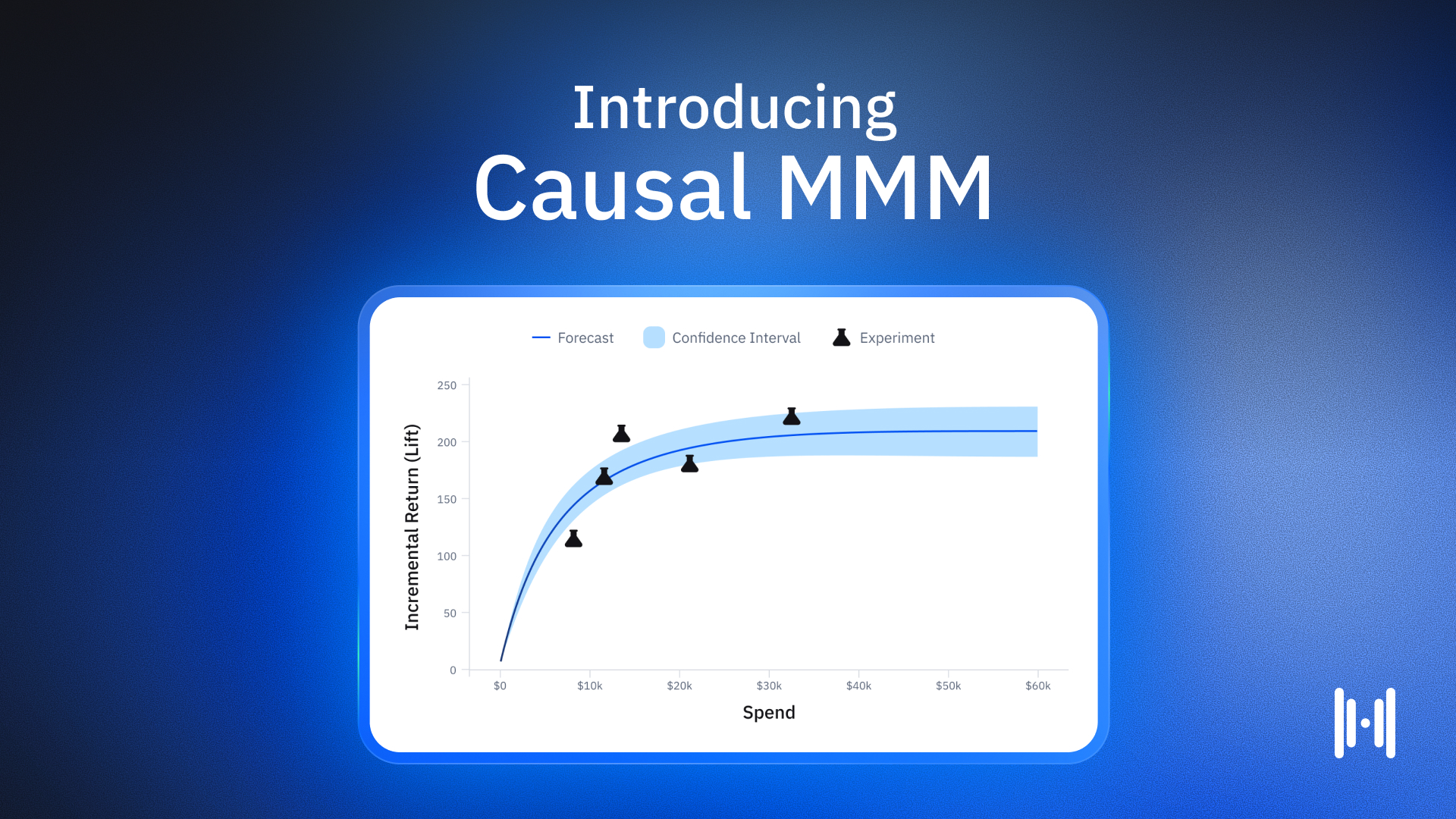

GeoLift tests randomize at the regional level, rather than at the user level inside the platform. This means:

- The platform doesn’t get to decide which populations are exposed to your campaign. You do, by assigning whole regions to treatment or control.

- We measure business outcomes, like conversions or revenue, in aggregate across entire geographies.

- The results reflect what actually happened in the real world — not just what the platform’s optimizer wanted to happen.

GeoLift measures the intent-to-treat effect, which answers:

“What happens to my business if I turn this campaign on for this population?”

Not:

“What happened to the subset of users the platform decided were worth serving ads to?”

And that distinction is crucial, especially if you’re doing high-stakes testing like:

- Creative mix strategy

- Campaign scaling decisions

- Cross-platform investments
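To illustrate the intent-to-treat idea, here is a deliberately simplified sketch. It is not a description of how GeoLift is implemented. It is a plain pooled difference in conversions per capita between treated and control regions, and the region names, populations, and conversion counts are all hypothetical. The key point is the denominator: everyone in a region counts, whether or not the platform served them an ad.

```python
# Hypothetical region-level data: (region, arm, population, conversions).
# Conversions are total business outcomes in the region, not platform-tracked ones.
regions = [
    ("Region A", "treatment", 100_000, 1_300),
    ("Region B", "treatment", 200_000, 2_500),
    ("Region C", "control", 100_000, 1_000),
    ("Region D", "control", 200_000, 2_100),
]


def conversions_per_capita(arm):
    """Pooled conversions per person across every region assigned to an arm."""
    population = sum(pop for _, a, pop, _ in regions if a == arm)
    conversions = sum(conv for _, a, _, conv in regions if a == arm)
    return conversions / population


# Intent-to-treat effect: the denominator is the whole population of each arm,
# regardless of who was actually served an ad.
itt_effect = conversions_per_capita("treatment") - conversions_per_capita("control")

treated_population = sum(pop for _, a, pop, _ in regions if a == "treatment")
incremental_conversions = itt_effect * treated_population

print(f"ITT effect per capita:   {itt_effect:.5f}")
print(f"Incremental conversions: {incremental_conversions:.0f}")
```

With these made-up figures, turning the campaign on is associated with about 700 extra conversions across the treated regions. A production geo test would also need to account for pre-existing differences between regions and report uncertainty, both of which this sketch omits.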

The bottom line on platform creative tests

A lot of this comes down to semantics, but those semantics matter.

From an academic perspective, platform creative tests may not meet the strict definition of a causal experiment. From a growth marketer’s perspective, they’re a useful — if imperfect — tool for optimizing performance within the bounds of the algorithm.

Both can be true.

But here’s the important distinction:

- If you want to know which creative and audience combo performs best on Meta, then in-platform tests can help — just be mindful of what they’re actually measuring.

- If you want to understand the incremental business impact of a creative strategy — independent of platform behavior, attribution quirks, or optimization bias — you need a different approach.

That’s where GeoLift comes in. It doesn’t just tell you which ad won the race that the algorithm designed. It tells you what happens when you turn the campaign on in the real world.

For marketers trying to scale efficiently, that’s the number that actually matters.
