What is incrementality?

At its core, incrementality answers the crucial question: Did a specific marketing intervention cause a change in business outcomes?

Unlike attribution models that assign credit based on correlations, incrementality modeling aims to isolate whether your campaign was responsible for new conversions, revenue, or other key results.

Think of it like a clinical drug trial. In medicine, researchers give one group a treatment and another a placebo, then observe differences in health outcomes. If the treated group gets better more often, the drug likely works.

In marketing, the "treatment" might be a new set of digital ads or a price promotion, and the "placebo" is just the absence of that activity for a comparable control group. By comparing these groups, you discover if the campaign drove real, incremental results that wouldn't have happened anyway.

Why incrementality matters: Causality over correlation

In today's marketing landscape, measuring what matters means moving beyond surface-level metrics.

- Causality vs. correlation: Attribution models often count conversions that "follow" ad exposure, but that doesn't mean the ad caused the sale. Correlation doesn't equal causation — incrementality focuses squarely on causality.

- Budget optimization: Without incrementality, brands can waste budget on channels or tactics that seem to perform well but actually drive little or no incremental lift. This results in inefficient spend allocation and missed opportunities.

- Privacy durability: As privacy regulations restrict granular user tracking, incrementality testing holds up because it doesn't depend on user-level identifiers. It uses group-level tests (such as geographies or time periods), making it more resilient to privacy changes.

- Transparency and trust: Incrementality tests, when properly run, clarify which marketing levers truly move the needle. They create confidence for marketing leaders, CFOs, and stakeholders on what actually drives growth.

What can you test for incrementality?

Incrementality testing has applications across nearly every dimension of marketing strategy:

- Channel mix optimization: Should you invest more in Meta or YouTube? Is Amazon or TikTok more effective for your brand? Incrementality experiments can quantify lift by channel.

- Tactical adjustments: Should branded search terms be included in campaigns? Are video creatives more effective than testimonials? Test these variations to find the best performers.

- Budget levels: What happens if you double your spend — or cut it in half? Is there a point of diminishing returns for a channel?

- Seasonality and promotions: If you increase spend during your peak season, do you see a proportionate increase in conversions?

- Omnichannel impact: How does your marketing affect offline retail, Amazon, and direct-to-consumer (DTC) site sales differently? Are campaigns shifting revenue across channels or creating entirely new demand?

How to run an incrementality experiment

1. Define the question and scope

Pinpoint what you're trying to learn. For example: "Does increasing YouTube ad spend generate incremental sales?"

Clarify the variable you want to test, the outcome metric that defines "success" (e.g., revenue, sales, subscriptions), and the relevant customer segments or geographies.

2. Design the experiment

- Establish treatment and control groups: Decide how large your holdout (control) group should be. A larger holdout generally yields more precise results, with narrower confidence intervals, but it withholds ads from more of your audience, which could affect the bottom line.

- Select the testing variable: Decide what will differ between groups — ad spend, creative, channel mix, etc.

- Set primary and secondary KPIs: Make sure you're measuring something that matters for your business objective, such as new customer orders or revenue.

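- Choose duration: The test should run long enough to smooth out fluctuations and cover your typical purchase cycle, but not so long that the cost of withholding marketing becomes burdensome. You could also include a post-treatment window to capture delayed conversions that arrive after the campaign ends.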
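To see how holdout size and duration trade off against precision, here is a minimal sketch, assuming user-level randomization and a conversion-rate KPI (geo-level tests have far fewer units and usually need different methods). It uses the standard normal approximation for a two-proportion test to estimate the minimum detectable lift. The function name, the 3.8% baseline rate, and the 200,000-user audience are hypothetical.

```python
from statistics import NormalDist


def minimum_detectable_lift(baseline_rate, total_users, holdout_share,
                            alpha=0.05, power=0.8):
    """Approximate the smallest relative lift a two-group test can reliably detect.

    Normal approximation for a difference in two proportions, assuming both
    groups convert at baseline_rate when the campaign has no effect.
    """
    n_control = total_users * holdout_share          # users who do not see the ads
    n_treatment = total_users * (1 - holdout_share)  # users who see the ads
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)     # two-sided significance threshold
    z_power = NormalDist().inv_cdf(power)             # desired chance of detecting a real effect
    std_error = (baseline_rate * (1 - baseline_rate)
                 * (1 / n_control + 1 / n_treatment)) ** 0.5
    absolute_mde = (z_alpha + z_power) * std_error
    return absolute_mde / baseline_rate               # express as a relative lift


if __name__ == "__main__":
    # Hypothetical inputs: 3.8% baseline conversion rate, 200,000 users in the test.
    for share in (0.05, 0.10, 0.20, 0.50):
        mde = minimum_detectable_lift(0.038, 200_000, share)
        print(f"holdout {share:.0%}: detectable lift ≈ {mde:.1%}")
```

A larger holdout shrinks the detectable lift (up to a 50/50 split), and running the test longer raises `total_users`; both come at the cost of withholding ads from more people.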

Pitfall: Many brands make the mistake of using poorly matched test and control groups (e.g., regions with different demographics or seasonality). Ensure you're working with an incrementality partner that uses synthetic controls, which build a control group whose pre-test behavior closely tracks the treatment group, for more accurate results.
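As a minimal, illustrative sketch of the synthetic control idea (not any vendor's production method), the code below finds non-negative weights, summing to 1, over untreated "donor" regions so that their weighted sum tracks the treated region before launch. The post-launch gap between the treated region and that synthetic counterpart is the estimated effect. All function names and the weekly order data are hypothetical, and a production method would also need uncertainty estimates.

```python
def project_to_simplex(values):
    """Euclidean projection onto {w : every w_j >= 0 and sum(w) == 1} (sort-based method)."""
    ordered = sorted(values, reverse=True)
    running_total = 0.0
    theta = 0.0
    for j, value in enumerate(ordered, start=1):
        running_total += value
        candidate = (running_total - 1.0) / j
        if value - candidate > 0:
            theta = candidate  # keep the threshold from the last index that stays positive
    return [max(v - theta, 0.0) for v in values]


def fit_synthetic_control(treated_pre, donors_pre, iterations=5000):
    """Donor weights that best reproduce the treated region's pre-test series.

    Minimizes the sum of squared pre-period gaps with projected gradient descent,
    keeping the weights non-negative and summing to 1.
    """
    n_donors = len(donors_pre)
    n_periods = len(treated_pre)
    # 1 / (2 * sum of squares) is a safe step: it bounds the gradient's Lipschitz constant.
    step = 1.0 / (2.0 * sum(x * x for series in donors_pre for x in series))
    weights = [1.0 / n_donors] * n_donors  # start from an equal-weight average
    for _ in range(iterations):
        fitted = [sum(weights[j] * donors_pre[j][t] for j in range(n_donors))
                  for t in range(n_periods)]
        residuals = [treated_pre[t] - fitted[t] for t in range(n_periods)]
        gradient = [-2.0 * sum(residuals[t] * donors_pre[j][t] for t in range(n_periods))
                    for j in range(n_donors)]
        weights = project_to_simplex([w - step * g for w, g in zip(weights, gradient)])
    return weights


def estimated_effect(treated_post, donors_post, weights):
    """Per-period gap between the treated region and its synthetic counterpart."""
    synthetic = [sum(w * series[t] for w, series in zip(weights, donors_post))
                 for t in range(len(treated_post))]
    return [actual - synth for actual, synth in zip(treated_post, synthetic)]


if __name__ == "__main__":
    # Hypothetical weekly orders for three untreated donor regions before the test.
    donors_pre = [
        [100, 110, 120, 130, 140, 150],
        [200, 190, 180, 170, 160, 150],
        [50, 80, 50, 80, 50, 80],
    ]
    treated_pre = [150, 150, 150, 150, 150, 150]
    weights = fit_synthetic_control(treated_pre, donors_pre)
    print("donor weights:", [round(w, 3) for w in weights])
    # Hypothetical post-launch weeks for the donors and the treated region.
    donors_post = [[160, 170], [140, 130], [50, 80]]
    treated_post = [165, 162]
    effect = estimated_effect(treated_post, donors_post, weights)
    print("weekly effect:", [round(e, 1) for e in effect])
```

Constraining the weights to a weighted average keeps the synthetic region within the range of real donor behavior instead of extrapolating from it.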

3. Launch and monitor

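Use automated, privacy-compliant systems to track key performance indicators (KPIs) across all relevant channels, and monitor throughout the test for contamination between groups, such as holdout regions being exposed to the campaign.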

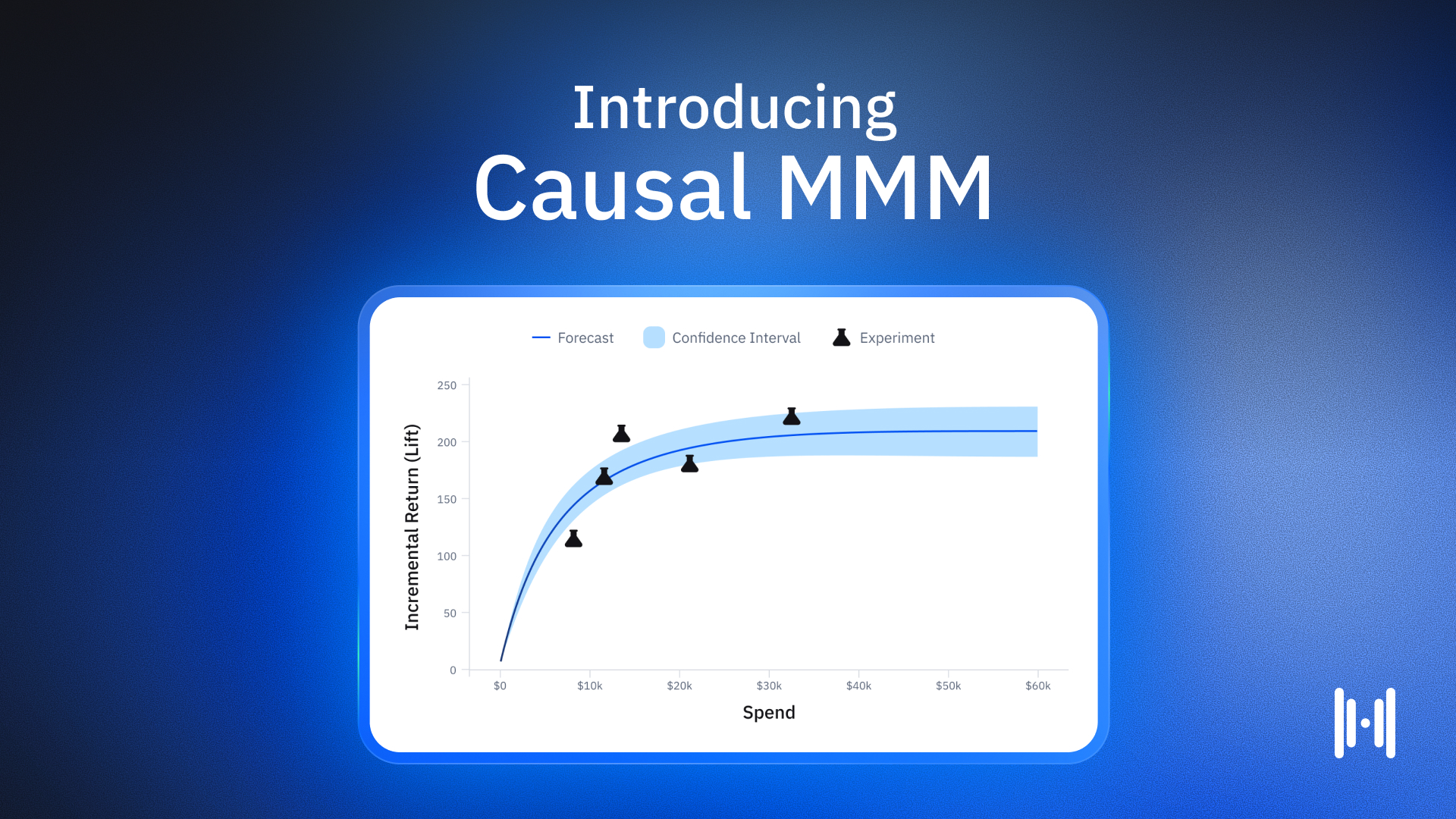

4. Analyze lift and incrementality

Calculate the difference in outcomes between the treatment and control groups. The traditional formula for lift is:

Incremental Lift = (Treatment Conversion Rate – Control Conversion Rate) ÷ Control Conversion Rate

Suppose your treatment group converts at 5.2% and your control group at 3.8%. Incremental lift = (5.2 – 3.8) ÷ 3.8 ≈ 36.8%: the treatment group converted about 36.8% more often than the control group, a difference the test attributes to your campaign.
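A point estimate alone can hide how uncertain the result is. Here is a minimal sketch that reproduces the 36.8% lift and adds an approximate confidence interval, built on the log of the rate ratio (a common normal approximation). The group sizes of 10,000 users each are hypothetical, since the example gives only conversion rates.

```python
import math
from statistics import NormalDist


def lift_with_interval(conv_treatment, n_treatment, conv_control, n_control, confidence=0.95):
    """Relative lift of treatment over control, with an approximate confidence interval.

    The interval is computed for log(rate_t / rate_c) and converted back to a lift.
    """
    rate_t = conv_treatment / n_treatment
    rate_c = conv_control / n_control
    lift = (rate_t - rate_c) / rate_c
    # Standard error of the log rate ratio: sqrt((1 - p_t) / x_t + (1 - p_c) / x_c)
    se_log_ratio = math.sqrt((1 - rate_t) / conv_treatment + (1 - rate_c) / conv_control)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    log_ratio = math.log(rate_t / rate_c)
    low = math.exp(log_ratio - z * se_log_ratio) - 1
    high = math.exp(log_ratio + z * se_log_ratio) - 1
    return lift, low, high


if __name__ == "__main__":
    # Hypothetical: 10,000 users per group, 520 vs. 380 conversions (5.2% vs. 3.8%).
    lift, low, high = lift_with_interval(520, 10_000, 380, 10_000)
    print(f"lift {lift:.1%}, 95% interval {low:.1%} to {high:.1%}")
```

With these assumed sample sizes the interval runs from roughly 20% to 56%; smaller groups would widen it considerably, which is why the interval belongs in any report alongside the headline lift.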

5. Make data-driven decisions

Use your findings to reallocate budget, refine targeting, or test new tactics. Often, experiments reveal unexpected truths — for instance, that doubling spend yields diminishing returns, or that a channel's reported cost per acquisition (CPA) was grossly optimistic once you adjust for actual incrementality.
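To illustrate how a reported CPA can look optimistic, here is a minimal sketch comparing a platform-style CPA (spend divided by the conversions the platform credits to itself) with an incremental CPA (spend divided by conversions the test attributes to the campaign). It assumes the treatment group would have converted at the control group's rate without ads; the function names and all figures are hypothetical.

```python
import math


def platform_cpa(spend, platform_attributed_conversions):
    """CPA as a platform typically reports it: spend per conversion it credits to itself."""
    return spend / platform_attributed_conversions


def incremental_cpa(spend, treatment_conversions, treatment_size, control_rate):
    """Spend per conversion that would not have happened without the campaign.

    The counterfactual assumes the treatment group would have converted at the
    control group's rate if it had not seen the ads.
    """
    counterfactual = control_rate * treatment_size
    incremental = treatment_conversions - counterfactual
    if incremental <= 0:
        return math.inf  # no incremental conversions, so the cost per one is unbounded
    return spend / incremental


if __name__ == "__main__":
    # Hypothetical: $100,000 spend; platform credits itself with 2,000 conversions;
    # 100,000 treated users produced 5,200 conversions; control converted at 3.8%.
    print(f"platform CPA:    ${platform_cpa(100_000, 2_000):.2f}")
    print(f"incremental CPA: ${incremental_cpa(100_000, 5_200, 100_000, 0.038):.2f}")
```

In this made-up case the platform reports a $50 CPA, while each truly incremental conversion costs about $71.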

Example: A health brand spends seven figures monthly on Meta and Google. After running an incrementality test, they discover that lowering spend by 25% has no measurable negative impact on conversions, so they can safely reallocate or save that budget.

Key mistakes to avoid in incrementality modeling

Brands venturing into incrementality measurement often fall into several common traps:

- Misaligned KPIs: Measuring lift on vanity metrics (e.g., clicks) rather than true business outcomes like net new customers or revenue leads to wasted effort.

- Relying on false precision: Some incrementality partners promise unrealistic levels of certainty about campaign effect. Be careful of any claim suggesting near-total certainty; business data is always noisy, and uncertainty is a fact of life.

- Placing too much trust in platform metrics: Sometimes, a platform will credit itself for conversions that aren't actually incremental. Without validating those numbers through incrementality testing, you might make flawed budget decisions.

- Trying to do too much in-house: Accurate incrementality testing requires significant expertise in causal inference and machine learning, plus growth experts who can help you interpret results and act on them confidently. Working with a trusted incrementality partner is often more effective (and cost-effective) than standing up your own incrementality practice.

Building a culture of experimentation: Practical considerations

When deciding whether it’s time to invest in incrementality testing, ask yourself the following questions about your organization:

- Are you spending across multiple channels or platforms?

- Is your monthly marketing spend significant (e.g., over $1 million)?

- Are you selling across multiple sales channels?

- Are you experiencing a growth plateau that isn't explained by standard analytics?

- Are traditional marketing mix modeling (MMM) or multi-touch attribution (MTA) tools no longer providing actionable or reliable insights?

Large enterprises like Amazon have internal experimentation teams, but for most brands, building in-house is costly and complex. Third-party partners like Haus can provide advanced synthetic control, data onboarding, and analytical support for more reliable and actionable experimentation, while remaining transparent about limitations and uncertainty.

Privacy, trust, and transparency in incrementality

Measurement that relies on individual-level tracking is on the decline. Incrementality testing sidesteps this challenge, since it doesn't require personal identifiers or pixel-based tracking. Instead, it uses aggregated, group-level data, making it both privacy-compliant and durable for the future.

It's also important to work with partners who are transparent about test design, assumptions, standard errors, and confidence intervals. False precision — a partner telling you they're 95% certain of a result when data noise could make that impossible — can lead to costly missteps.

Here’s a checklist for trustworthy incrementality measurement:

- Demand transparency about methodology and precision; don't accept "black box" answers.

- Challenge recommendations suggesting dramatic budget shifts on flimsy evidence.

- Bring a data scientist or someone with a background in causal inference to the conversation.

- Ask for transparency around test power and standard error estimation.

Case study: Incrementality in action

Example: Ritual, a health brand, piloted TikTok campaigns but wasn't sure whether they really moved the needle. Their first incrementality test showed no lift. Instead of immediately dropping TikTok, they adjusted their creative and retested during a higher-demand period. The new test revealed a positive incremental lift, validating TikTok as a viable channel after some refinements.

This dynamic, experiment-measure-optimize cycle is at the heart of modern, incrementality-focused marketing strategy. Brands embracing this logic move beyond guesswork and legacy metrics, systematically discovering and scaling what works.

Conclusion: Embracing incrementality as a marketing foundation

Marketing measurement in 2025 is full of complexity — fragmented touchpoints, privacy headwinds, and opaque reporting. Incrementality modeling cuts through the noise, providing causal, privacy-durable insight into what your marketing dollars are actually accomplishing. By designing careful experiments, focusing on the outcomes that matter, and acting confidently where the evidence supports it, brands can power growth and avoid wasted spend. An incrementality practice is essential for any company serious about effective, transparent marketing decision-making.

FAQ: Understanding incrementality in marketing

What is incrementality in marketing?

Incrementality in marketing answers the critical question: Did a specific marketing intervention cause a change in business outcomes? Unlike attribution models that assign credit based on correlations, incrementality modeling isolates whether your campaign was actually responsible for new conversions, revenue, or other key results. It's about determining causality — whether your marketing efforts truly generated results that wouldn't have happened otherwise.

What is the concept of incrementality?

The concept of incrementality is similar to a clinical drug trial. In medicine, researchers give one group a treatment and another a placebo, then observe differences. In marketing, the "treatment" might be a new set of digital ads or a price promotion, and the "placebo" is the absence of that activity for a comparable control group. By comparing these groups, you discover if the campaign drove real, incremental results that wouldn't have happened anyway.

What is an example of an incrementality test?

Consider a coffee concentrate brand unsure whether its top-of-funnel (TOFU) or bottom-of-funnel (BOFU) Meta campaigns are more incremental. Using geographic segmentation, the brand withholds TOFU ads in one region and BOFU ads in another, and tracks orders on both Shopify and Amazon. The results might show that TOFU campaigns deliver significantly more incremental conversions per dollar spent, allowing the brand to reallocate budget accordingly and improve overall efficiency.

How to measure incrementality

To measure incrementality effectively:

- Define your question and scope (what you're testing and what success looks like)

- Design the experiment with comparable treatment and control groups

- Launch and monitor the test, collecting data rigorously

- Analyze incremental lift

- Make data-driven decisions based on the results

What is incrementality testing vs MMM vs MTA?

Incrementality testing focuses on causality, using controlled experiments to isolate the impact of specific marketing interventions. Marketing mix modeling (MMM), sometimes called media mix modeling, uses statistical analysis of historical data to attribute value across channels at an aggregate level. Multi-touch attribution (MTA) assigns credit to touchpoints along the customer journey based on individual users' paths to conversion. The key difference is that incrementality testing is specifically designed to determine causality, while MMM and MTA rely on observational data and are primarily focused on correlation and credit assignment.

What is the difference between ROAS and incrementality?

Return on ad spend (ROAS) measures the gross revenue generated for every dollar spent on advertising, regardless of whether those sales would have happened without the ads. Incrementality, however, measures the net new revenue or conversions that occurred specifically because of your marketing efforts — excluding sales that would have happened anyway. ROAS can be misleading because it doesn't distinguish between caused and coincidental conversions, while incrementality specifically isolates true marketing impact.
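The contrast can be made concrete with a minimal sketch. It assumes the counterfactual revenue (what the treatment group would have produced without ads) comes from a matched control group; "incremental ROAS" is a common name for the second metric, and the function names and figures are hypothetical.

```python
def roas(attributed_revenue, spend):
    """Gross revenue credited to ads per dollar spent, including sales that would have happened anyway."""
    return attributed_revenue / spend


def incremental_roas(treatment_revenue, counterfactual_revenue, spend):
    """Net new revenue caused by ads per dollar spent.

    counterfactual_revenue is the revenue the treatment group would have produced
    without ads, e.g. estimated from a matched control group.
    """
    return (treatment_revenue - counterfactual_revenue) / spend


if __name__ == "__main__":
    # Hypothetical: $50,000 spend; platform-attributed revenue of $200,000;
    # treatment revenue of $500,000 vs. an estimated $440,000 without ads.
    print(f"ROAS:             {roas(200_000, 50_000):.2f}")
    print(f"incremental ROAS: {incremental_roas(500_000, 440_000, 50_000):.2f}")
```

Here the channel looks like a 4.0x return on a ROAS basis but returns only about $1.20 of net new revenue per dollar once baseline sales are excluded.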

Incrementality: The fundamentals

The fundamental principles of incrementality include:

- Focus on causality rather than correlation

- Proper experimental design with matched control groups

- Measurement of true business outcomes (not vanity metrics)

- Understanding statistical significance and confidence intervals

- Accounting for external factors and seasonality

Measuring impact: The beginner's guide to incrementality

For beginners approaching incrementality:

- Start with a clear, focused question about a single channel or tactic

- Ensure your test groups are truly comparable (similar demographics and behaviors)

- Run the test long enough to account for sales cycles and normal fluctuations

- Look beyond platform-reported metrics to true business outcomes

- Accept that perfect certainty is impossible — aim for actionable insights with reasonable confidence

- Start with tests that can detect large effects before trying to measure small incremental effects, which need bigger samples or longer durations to detect reliably

How do you measure incrementality?

To measure incrementality accurately:

- Establish comparable treatment and control groups

- Isolate the variable you want to test (spend level, creative, channel, etc.)

- Ensure proper test duration based on your sales cycle

- Monitor for contamination between test groups

- Calculate lift using the formula: (Treatment Result - Control Result) ÷ Control Result

- Apply statistical analysis to estimate standard errors and confidence intervals, not just a single lift number

- Translate findings into business impact metrics (incremental revenue, profit, etc.)
