It’s a Tuesday morning, and your marketing team is on Zoom for your weekly sync. But what started as a quick half-hour check-in soon turns into an hour-long argument over the subject lines in your latest email campaign.

Finally, your boss pipes up: “We’ll settle it with an A/B test.”

Whether you’re arguing about the syntax of a subject line or the color on your company homepage, teams frequently turn to A/B tests to optimize creative details. This dynamic comparison of two creative variants is one of the most trusted methods in a marketer’s toolkit.

But too often, marketers discuss A/B testing interchangeably with another popular form of testing: incrementality testing. So, what’s the difference?

While A/B testing compares variants within a campaign, incrementality testing helps you measure the campaign’s causal impact. Through randomized controlled trials, incrementality testing helps you figure out how many conversions were a direct result of your campaign. In short:

- A/B testing measures which variant of a treatment is more effective

- Incrementality testing measures whether the overall treatment drives desired business outcomes

Naturally, these two methodologies are complementary. You could run an incrementality test to see if upper-funnel Meta campaigns are an incremental channel for your brand. Once you’ve determined that it’s incremental, you could run an A/B test that helps you decide whether you should use Video Asset A or Video Asset B for your Meta campaign.

As channels multiply — from social to search — and campaigns get complex, you need micro-level insights on the details and macro-level proof of overall impact. So let’s explore the differences between these two testing methods and how they can work together.

Overview: It’s a difference of scope and insight

You’re starting a garden, but… you don’t know what you’re doing. So you decide that this first go-round, you’re just going to experiment and learn. To determine if you need fertilizer, you sprinkle fertilizer over one plot, then just stick to water and sunlight for the adjacent plot.

Three months pass, and you learn that you do, in fact, need fertilizer. Those tomatoes that didn’t get fertilizer are looking awfully paltry. So the following season, you use fertilizer — but you test out two different fertilizers.

This example underscores the fundamental difference between A/B testing and incrementality testing. A/B testing two different fertilizers doesn’t tell you if using fertilizer is more effective than not using fertilizer. And incrementality testing won’t tell you which fertilizer is most effective.

It’s a difference of scope: A/B testing provides feedback on variants within a given treatment, while incrementality testing assesses the treatment’s overall effectiveness. By holding out a control group that gets no treatment at all, incrementality testing measures causation, not just correlation.

Practical differences: Incrementality testing vs. A/B testing

Not all experiments are created equal. When you choose a test, weigh the trade-offs in speed, cost, data requirements, and the kind of answer you get.

Each method has its place — run A/B tests for rapid creative feedback, then layer in incrementality whenever you need causal proof to optimize budgets.

The fundamental differences: A/B vs. incrementality testing

We’ll put it bluntly: Incrementality testing is just a bit more involved than A/B testing. An incrementality test result might lead to a significant change in strategy or budget allocation. That’s why incrementality testing is generally recommended for brands advertising on multiple channels and spending millions of dollars yearly on marketing.

For instance, FanDuel turned to incrementality testing with Haus because they needed to future-proof their business amid privacy changes. These were major changes that would affect the company for years to come. When they ran incrementality tests, they were able to adjust their investments and save millions of dollars.

An A/B test result can certainly affect strategy and budgeting, but rarely on that scale. A/B tests are more often used for fine-tuning after you’ve already settled on an overall strategy. An advantage? They’re quick and lean, while incrementality demands more data, more setup, and more expertise. That’s why many companies turn to an incrementality testing partner instead of handling it in-house.

A/B testing is great for optimizing creative details

A/B testing is your go-to when you want to optimize every element. When you swap a button’s shade or tweak a headline, you see which version resonates better — like auditioning actors on stage and tracking audience applause.

It’s perfect for testing one variable at a time (e.g., subject lines, button colors). Because you control exposure fully, you isolate which variation performs best — and get clear, actionable results fast.

Use A/B testing when you need rapid, low-cost insights on UI, copy, or layout tweaks. Most platforms handle the randomization, audience split, and reporting required for an A/B test — so you can focus on creative optimization.
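If you’re curious what that reporting step looks like under the hood, here is a minimal sketch of one common way to compare two variants: a two-proportion z-test. The function name `ab_test` and the open counts are hypothetical, chosen only for illustration. Your platform may use a different statistical method.

```python
from math import sqrt, erf


def ab_test(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test comparing variant B against variant A."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Standard normal CDF computed from the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - cdf)
    return {"rate_a": rate_a, "rate_b": rate_b, "z": z, "p_value": p_value}


# Hypothetical data: opens out of sends for two subject lines.
result = ab_test(conv_a=200, n_a=1000, conv_b=250, n_b=1000)
print(result)
```

A small p-value suggests the gap between the two subject lines is unlikely to be noise. Note what the test compares: variant B against variant A, never either variant against sending nothing.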

But keep in mind: A/B testing can’t show what would’ve happened without any ad exposure — it doesn’t test the counterfactual. You'll need incrementality testing to prove true lift and net-new impact.

Ideal use cases for A/B testing

Here are a few scenarios where A/B testing really shines:

- Email campaigns. Swap subject lines or test CTA copy. For example, maybe you run two versions of your newsletter — one question-based, one benefit-driven — and compare open rates.

- Landing pages. Experiment with headlines or form placements. For instance, you might test a concise headline against a descriptive one, then track form submissions.

- On-site elements. Tweak button placement, color, or product descriptions. Try a sticky “Add to cart” button vs. a static one, then measure clicks to checkout.

Incrementality testing tracks how campaigns lead to sales

Incrementality testing measures the causal impact of your budget allocation across channels or markets, functioning as a randomized controlled trial for your marketing. These tests hold out a control group to measure how many extra conversions you drove beyond what would’ve happened without an ad.

Haus’ Principal Economist Phil Erickson describes incrementality another way: “Incrementality measures how a change in strategy causes a change in business outcomes. For example, how would my revenue increase if I upped my ad budget by 10%?”

This approach scales to multi-channel campaigns, letting you see which channels are actually adding new customers, not just driving last-click conversions.
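To make the holdout comparison concrete, here is a rough sketch of the arithmetic behind a lift readout. It uses a simple rate comparison between a treated group and a holdout group. The function name `incremental_lift` and every number below are hypothetical. Production tests (including the synthetic control methods mentioned later in this article) are considerably more sophisticated than this.

```python
def incremental_lift(treat_conv, treat_size, control_conv, control_size, spend):
    """Estimate incremental conversions from a treated group and a holdout group."""
    control_rate = control_conv / control_size
    # Counterfactual: what the treated group would have converted with no ads,
    # assuming it behaves like the holdout group.
    baseline = control_rate * treat_size
    incremental = treat_conv - baseline
    return {
        "baseline_conversions": baseline,
        "incremental_conversions": incremental,
        # Relative lift over the no-ad baseline.
        "lift": incremental / baseline if baseline else float("nan"),
        # Cost per incremental conversion (only meaningful when lift is positive).
        "cost_per_incremental": spend / incremental if incremental > 0 else float("nan"),
    }


# Hypothetical numbers: 100,000 people saw ads, 50,000 were held out.
summary = incremental_lift(
    treat_conv=1200, treat_size=100_000,
    control_conv=500, control_size=50_000,
    spend=10_000,
)
print(summary)
```

The key design choice is the baseline: conversions are credited to the campaign only if they exceed what the holdout group suggests would have happened anyway.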

Use cases for incrementality testing

Here are a few common situations where brands turn to incrementality testing:

- Testing tactics: Maybe you’re trying to figure out the right mix of upper-funnel and lower-funnel creative. When Javvy tested this with Haus, they unlocked major growth.

- New channel pilots: Test before you go all-in on a shiny new platform. That’s what Ritual did before adding TikTok to their evergreen media mix.

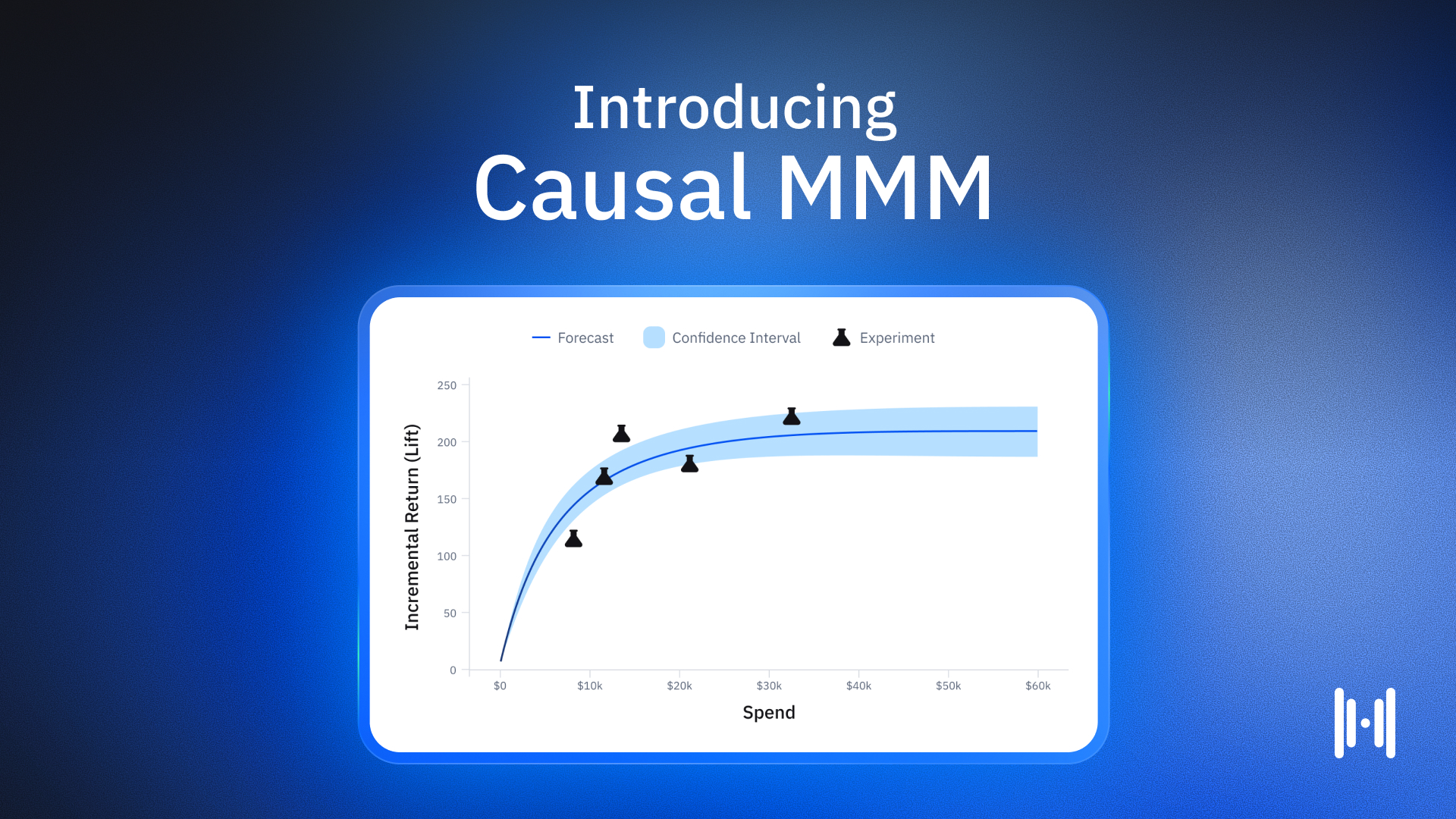

- Optimal spend level: Perhaps your team wants to find the point of diminishing returns to allocate budget efficiently. When FanDuel tested to find their diminishing return curve, they optimized YouTube spend.

- Retargeting validation: Confirm you’re creating new conversions, not just re-reaching shoppers who would have bought anyway. For example, see Lalo’s upper-funnel testing strategy.

- Identify halo effects: If you’re an omnichannel brand, you might want to know if your ads are driving customers to your official site, social channels, Amazon, or some combination. For Newton Baby, unpacking this cross-channel journey gave them a major ROI boost.

When to use A/B testing vs. incrementality testing

Here’s your cheat sheet: start with the question you need to answer, then pick the experiment that fits.

- Which headline converts best? Choose A/B testing.

- Did this channel add net-new customers? Choose incrementality testing.

- Which push-notification message maximizes click-through? Choose A/B testing.

- Does our referral bonus program bring net-new users? Choose incrementality testing.

- Which call-to-action button color yields more conversions? Choose A/B testing.

Keep these nuances top of mind as you plan experiments. Start by asking the right question, then apply the right method.

How A/B testing and incrementality work together

A/B testing and incrementality testing can be combined to produce even more powerful results. This involves randomly assigning users to different treatments while also holding out a control group that sees no treatment.

For instance, you could design a test where one-third of designated market areas (DMAs) see a PMax campaign with brand terms, one-third see a PMax campaign without brand terms, and one-third see no PMax campaign at all.

This was Caraway’s approach when they used Haus incrementality tests to maximize PMax performance. This 3-cell test design helps you not only determine whether you should include brand terms but also whether PMax campaigns are incremental more broadly.
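Here is a minimal sketch of how a 3-cell design like this could be set up and read out. It assumes plain random assignment of regions, which is a simplification and not a description of how Haus or Caraway actually built the test. The cell names, region IDs, and conversion rates are all hypothetical placeholders.

```python
import random

CELLS = ["pmax_with_brand", "pmax_without_brand", "holdout"]


def assign_cells(dmas, cells, seed=0):
    """Shuffle regions, then deal them round-robin into equally sized cells."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = list(dmas)
    rng.shuffle(shuffled)
    assignment = {cell: [] for cell in cells}
    for i, dma in enumerate(shuffled):
        assignment[cells[i % len(cells)]].append(dma)
    return assignment


def read_out(rates):
    """rates: conversions per capita for each cell, measured after the test."""
    holdout = rates["holdout"]
    return {
        # Incrementality questions: is each PMax setup better than no PMax?
        "lift_with_brand": rates["pmax_with_brand"] / holdout - 1,
        "lift_without_brand": rates["pmax_without_brand"] / holdout - 1,
        # A/B question: which PMax setup is better?
        "brand_vs_no_brand": rates["pmax_with_brand"] / rates["pmax_without_brand"] - 1,
    }


dmas = [f"DMA-{i:03d}" for i in range(1, 31)]  # placeholder region IDs
cells = assign_cells(dmas, CELLS, seed=42)

# Hypothetical post-test conversion rates per cell.
comparison = read_out(
    {"pmax_with_brand": 0.012, "pmax_without_brand": 0.011, "holdout": 0.010}
)
print(comparison)
```

The holdout cell is what turns an A/B comparison into an incrementality test: without it, you could rank the two PMax setups but not tell whether either one beats running no PMax at all.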

Why Haus leads with incrementality

A few differentiators have led more and more brands to trust Haus as their incrementality testing partner:

- Proven track record: Haus has optimized billions in ad spend for customers like FanDuel, Caraway, Intuit, Sonos, Ritual, Pernod Ricard, Jones Road Beauty, and more.

- Rigorous frontier methods: Haus uses frontier methodologies to maximize experiment accuracy and precision, like synthetic controls, which produce results that are 4X more precise than those produced by matched market tests.

- Time to value: Haus gets you up and running quickly so that you can have your first results in a matter of weeks.

- Dedicated team: Our teams of PhD economists, data scientists, and measurement strategists work with hundreds of large brands, running 3,000+ analyses each year.

- Omnichannel: Haus ingests Amazon and retail data to report total lift across all sales channels for every experiment you run, helping you measure the true impact of your marketing efforts even when your customers convert on DTC, Amazon, or retail.

FAQs about how incrementality experiments differ from A/B experiments

What is the fundamental difference between A/B testing and incrementality testing?

A/B testing compares two variants within an existing campaign to see which performs better, whereas incrementality testing measures the causal lift of an entire treatment by holding out a control group.

The key distinction is that A/B testing shows relative performance between options, while incrementality testing shows whether the activity drove results that would not have happened otherwise.

When should I use A/B testing vs. incrementality testing?

Use A/B testing for rapid, low-cost creative tweaks (e.g., subject lines, button colors) and incrementality testing when you need causal proof of net-new impact (e.g., validating channel effectiveness or budget allocation).

In practice, A/B testing optimizes execution within a channel, while incrementality testing evaluates whether the channel itself — or a major tactic — is truly worth the investment.

How can A/B testing and incrementality testing work together?

You can initially run an incrementality test to confirm a campaign’s overall lift, then follow up with A/B tests to optimize specific creative elements within that proven channel.

What practical trade-offs differentiate these testing methods?

A/B tests are quick, inexpensive, and DIY-friendly but only show relative wins, while incrementality tests require larger samples, deeper analysis, and expertise to deliver true causal insights.

What are the different types of incrementality testing?

Incrementality testing includes several methods, such as:

- Geo-testing. Comparing results across geographic regions. Geo-testing shows how performance differs in places exposed to advertising versus those that are not.

- Known audience testing. Using first-party data to segment users. This allows advertisers to measure the incremental impact of ads on defined customer groups.

- Conversion lift. Studies offered by ad platforms. These (flawed) tests evaluate whether ads directly cause more conversions compared to a control group.

- Observational or natural experiments. Analysis of real-world changes. This approach looks for natural variations in exposure and outcomes to estimate incremental impact.

Why is incrementality testing considered more complex or demanding than A/B testing?

Incrementality testing typically requires larger sample sizes, more extensive data analysis, and specialized expertise to set up and interpret correctly. This is because it aims to measure the causal impact of an entire campaign against a control group, demanding rigorous experimental design.

Unlike A/B tests, which give quick creative feedback, incrementality tests often inform significant strategic changes, so they call for robust data and careful setup.
