The Meta Report: Lessons from 640 Haus Incrementality Experiments

An exclusive Haus analysis shows Meta is incremental in most cases – but is the platform's move toward automation improving incremental efficiency?

Tyler Horner, Solutions Engineering Lead

Jul 28, 2025

Background

When I joined a boutique agency and launched my first Facebook ad in 2017, the optimal buying strategy on the platform looked something like this: Test as many audiences and ad permutations as possible, prune bottom performers, repeat.

Our accounts were so unwieldy that we had to launch all new ads in bulk sheets via the notorious (and now-deprecated) Power Editor, uploading as many ads as we could in one go without erroring the system out – usually around 200 to 400 ads per sheet, across at least a half-dozen sheets. Needless to say, I stayed late on my first day… and many more after that.

During this period, your advantage was your ability to test, learn, and iterate at scale. Nobody internally or in the industry at large was talking about consolidation or simplification – though that would soon change.

Meta’s Era of Automation

As it often goes with Meta, an algorithm update (circa 2018) inspired the first in a series of events that would drive our agency and the industry at large to steadily relinquish control of our campaigns to the machine.

As we began restructuring accounts to combat waning performance, it became clear that signal density was now essential. Within the span of a year, accounts with hundreds of campaigns slimmed down to fewer than 10. Broad audiences with essentially no targeting were, in some cases, outperforming the cavalry of lookalikes. Gluttonous ad sets stuffed with the maximum 50 ads were now on a 5-ad diet – any new ads would have to prove themselves in separate “testing” campaigns before getting their shot at the big leagues.

Eventually, the still misunderstood “learning phase” made its debut, herding any remaining stragglers to our new reality.

Fast-forwarding to the present day, we find ourselves at the logical conclusion of this movement – Advantage+ Sales Campaigns (historically Advantage+ Shopping Campaigns, or ASC), the primary instrument of automation Meta built in response to iOS14 and ATT, are set to become the exclusive sales campaign type on the platform for most advertisers. Advantage+ was designed to automatically manage the things buyers used to do manually – budget management, creative testing, and audience targeting. With this update, “you no longer need to choose between running a manual or Advantage+ campaign”.

Advertisers will still be able to dial down the automation and wrest back control, but that raises the question: Is it worth continuing to resist, or is it time to fully embrace Meta’s suite of AI-driven advertising tools? At the end of all this automation, are brands actually better off?

Over the last 18 months, Haus customers have been rigorously testing to find their own answers to this very question. Since the start of 2024, they’ve collectively run 640 Meta incrementality tests using the Haus platform, including head-to-head tests comparing Advantage+ to Manual campaigns.

We’re here today to bring you a sampling of the insights we gleaned from this invaluable dataset, aggregated across hundreds of industry-spanning brands who set out to learn for themselves. There is no one-size-fits-all solution here, but we hope this data provides you with guidance and unique color in navigating this era of automation.

Insights from 640 Meta incrementality tests

Understanding the data

Before we get into it, here are a few notes on the test data and terminology in this research:

- The average test in this study ran for 18.6 days with an 8.8-day post-treatment observation window (PTW) for a total test duration of approximately 27.4 days. Upper-funnel tests ran for slightly longer on average (34.4 days).

- An incrementality factor (IF) is the ratio of the incremental sales a channel drives to the sales reported in-platform. Unless otherwise noted, IF calculations use a 7-day click, 1-day view attribution window.

- iROAS = Incremental Return on Ad Spend. Unless otherwise noted, we are referring to DTC-only iROAS including the PTW.

- We will refer to non-Advantage+ campaigns as Manual campaigns.

- The average advertiser in this study spends $14 million annually on Meta. Advertisers span all major verticals.
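To make the two core metrics concrete, here is a minimal sketch of how IF and iROAS follow from the definitions above. The function names and all dollar figures are hypothetical and chosen only for illustration; they are not drawn from the study.

```python
def incrementality_factor(incremental_revenue: float,
                          platform_reported_revenue: float) -> float:
    """IF = incremental revenue / platform-reported revenue."""
    if platform_reported_revenue <= 0:
        raise ValueError("platform-reported revenue must be positive")
    return incremental_revenue / platform_reported_revenue


def iroas(incremental_revenue: float, spend: float) -> float:
    """iROAS = incremental revenue / ad spend."""
    if spend <= 0:
        raise ValueError("spend must be positive")
    return incremental_revenue / spend


# Hypothetical test readout (illustrative numbers only).
spend = 50_000.0
platform_reported = 150_000.0   # revenue the platform attributes to itself
incremental = 120_000.0         # revenue the experiment measured as incremental

if_value = incrementality_factor(incremental, platform_reported)
iroas_value = iroas(incremental, spend)

print(f"IF: {if_value:.2f}x, iROAS: {iroas_value:.2f}x")
```

An IF below 1.0x means the platform is claiming more revenue than the experiment found to be incremental; an IF above 1.0x means it is claiming less.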

Now that that’s out of the way, the fun stuff…

When it comes to incrementality, Meta delivers

Let’s get the easy one out of the way first. Love it or hate it, Meta works and there’s a reason why so many brands’ portfolios are highly dependent on it.

Across the account-wide studies included in this analysis, Meta drove an average 19% lift to the brand’s primary KPI (e.g. Total Revenue, New Customers). That means nearly ⅕ of their most critical business metric would be wiped out almost immediately without Meta ads.

Out of the 100 highest-lift experiments ever run on Haus, Meta represents 77 of them. It also holds the record for any single-channel test on Haus at 74% lift.

Furthermore, for advertisers using click-only performance, Meta actually under-reports incrementality – by 15% on average for 7-day click in-platform attribution (DTC-only).

In most cases, there’s no questioning whether Meta is incremental – something we don’t see across every channel. But incrementality isn’t enough. In order to make optimal decisions, you must understand incrementality relative to spend (i.e. incremental efficiency). This varies from business to business and must be tested to know for sure.

The relevant question then is how Meta’s AI-driven targeting tools are affecting its incremental efficiency — something we’ll dig into shortly.

Meta acts fast

Many of the upper-funnel channels we rely on have a similar profile: an extended ramp-up to incremental impact, then lingering effects after the campaign. But this description doesn't fit Meta.

96% of account-wide studies on the channel detected significant lift by the midpoint of the experiment, and the average lift in post-treatment iROAS was +26%, a figure that aligns more closely with the data we see for search campaigns than other social or video channels.

The benefit is that, if you’re simply setting out to prove that Meta works, most brands shouldn’t need more than one or two weeks to do so.

Meta’s impact extends beyond DTC

Later we’ll dive more into the nuances of omnichannel impacts by tactic, but, if you’re an omnichannel business, Meta is not only impacting your DTC sales.

Across brands doing at least 25% of their business outside of DTC (e.g. Amazon, physical retail), approximately 32% of Meta’s impact went to those non-DTC channels’ top line. Keep this in mind if you’re targeting break-even iROAS on Meta – the average omnichannel brand would only need a 0.68x DTC iROAS to break even across all sales channels.
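The 0.68x figure is simple arithmetic, sketched below. The sketch assumes that "break even" means a 1.0x iROAS across all sales channels on a revenue basis and that the 32% non-DTC share applies uniformly; the function names are hypothetical.

```python
def breakeven_dtc_iroas(non_dtc_share: float,
                        total_breakeven_iroas: float = 1.0) -> float:
    """DTC-only iROAS needed to hit the break-even target across all channels.

    non_dtc_share: fraction of Meta's incremental impact landing outside DTC.
    """
    if not 0.0 <= non_dtc_share < 1.0:
        raise ValueError("non_dtc_share must be in [0, 1)")
    return total_breakeven_iroas * (1.0 - non_dtc_share)


def total_iroas(dtc_iroas: float, non_dtc_share: float) -> float:
    """Scale a DTC-only iROAS up to an all-channel iROAS."""
    return dtc_iroas / (1.0 - non_dtc_share)


threshold = breakeven_dtc_iroas(0.32)
print(f"Break-even DTC iROAS: {threshold:.2f}x")
print(f"All-channel iROAS at that threshold: {total_iroas(threshold, 0.32):.2f}x")
```

Swap in your own non-DTC share, ideally from your own test, since 32% is an average across brands.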

Everything you need to know about Advantage+

Advantage+ launched in 2022 as Meta’s latest campaign type, touting a new machine learning model that was better equipped to leverage a brand’s historical account data to more quickly identify high-intent users and hasten the learning phase. Let’s explore how this is playing out for brands.

Advantage+ identifies intent more quickly, but lags slightly behind on incremental efficiency and funnel-building

By 2024, 93% of brands adopted Advantage+ — and on average it accounted for 39% of each brand's total ad account spend. Based on platform data, the campaign was performing well, driving a 2.4% higher ROAS on average compared to Manual campaigns, albeit at a lower scale.

One might have expected advertisers to continue scaling these campaigns based on this data, but a year later, these figures have not changed: There has been no increase in the portion of brands leveraging Advantage+ and its share of total ad spend is flat. One potential reason? Lower incrementality.

Despite stronger platform-reported performance, 58% of brands saw higher iROAS on Manual campaigns than Advantage+ in head-to-head tests. On average, Advantage+ drove 12% lower DTC iROAS at 18% lower daily spend.

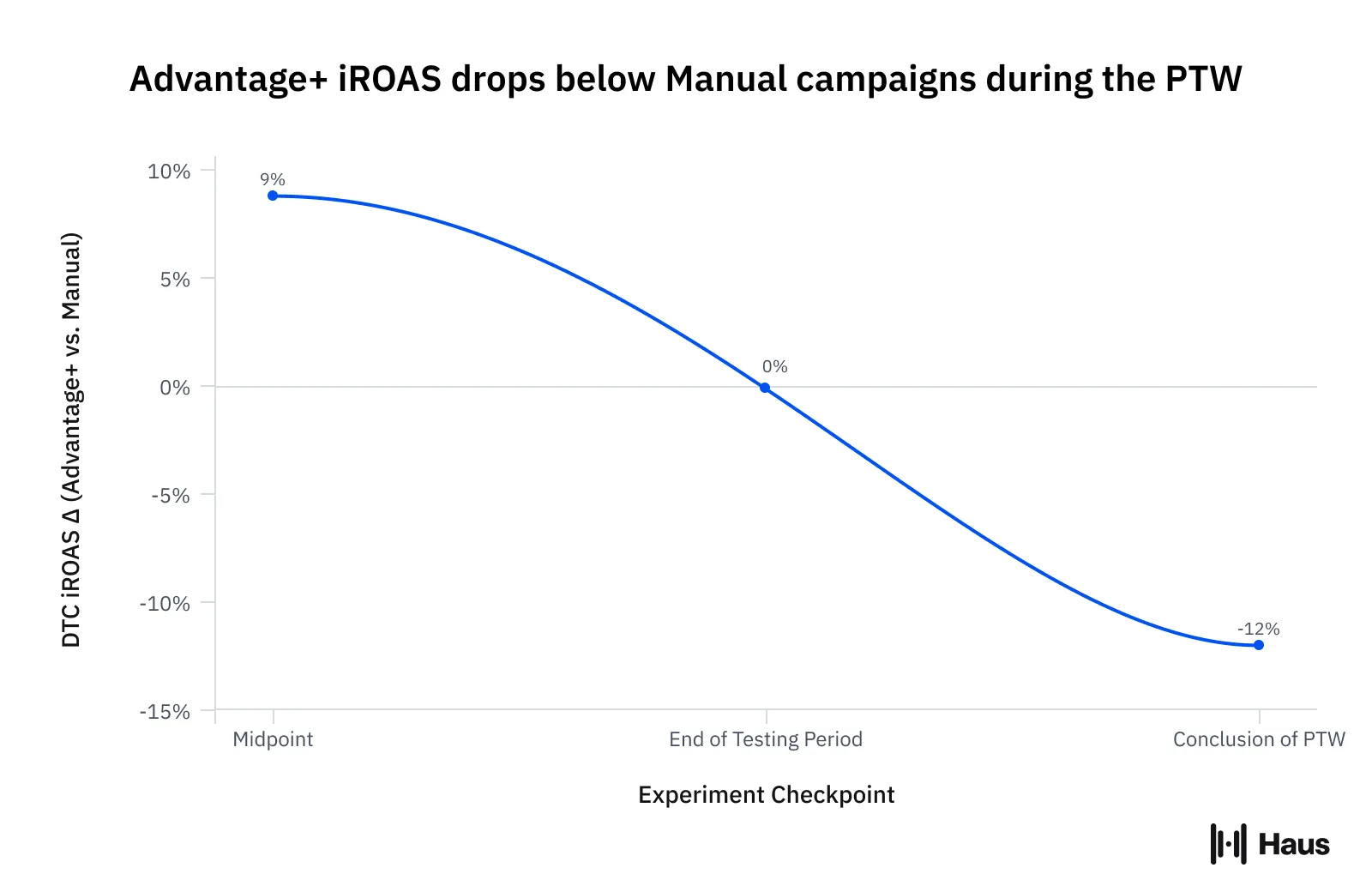

Much of this performance discrepancy comes from the tendency of Advantage+ to drive more immediate impact but less lagged impact. Advantage+ outperformed Manual campaigns by 9% on average at the experiment midpoint before falling even at the end of the testing period and behind following the PTW; the average PTW lift across all Advantage+ tests was +17% vs +32% for Manual campaigns.

Taking into account this post-treatment period, Advantage+ over-reported relative to Manual campaigns by 12 percentage points on average. That means for every $100 of revenue the platform reports, Manual campaigns deliver roughly $12 more revenue to the business than Advantage+, on average. This bias is likely the result of an intent identification model that works a bit too well.

Advantage+ is still an essential new-customer acquisition tool

The data above might read like an indictment of Advantage+ at first glance, but that’s not our takeaway. This data should help you better understand how this campaign type operates, not categorically define it as good or bad.

Remember, for 42% of brands in the study, Advantage+ outperformed Manual on an iROAS basis; for 39% of brands, it gets the majority of their Meta budget. To put it simply, while Manual campaigns perform better on average, there are still a lot of brands that have benefitted from the introduction of Advantage+.

To clear up another potential misconception, Advantage+ drove a slightly higher share of its impact on new customers than Manual setups – 70% vs 65%. Share of spend on retargeting was essentially flat (~15%).

Haus experiment data tells a clear story here: While Advantage+ excels at identifying high-intent users, potentially at the expense of its own incrementality, it is not simply a tool for smuggling site visitors and existing customers into prospecting campaigns.

Used correctly, Advantage+ has a valuable role to play for nearly all brands. We’ve seen it win out for brands across a wide swath of verticals – subscription, cosmetics, apparel, home goods, accessories, and more. And even if you find Manual campaigns outperform Advantage+ during evergreen periods (as our data shows most will), its ability to quickly identify intent and ramp could make it an asset for your brand during short promotional periods or holidays.

Balancing campaign types isn’t necessarily best

Absent a high-quality incrementality test to point you in the right direction, your instinct based on the above data might be to simply split the difference and allocate 50% of budget to each campaign type. We wouldn’t recommend that.

You’re likely better off optimizing towards where you see performance – while Advantage+ tends to over-report a bit relative to Manual campaigns, if it’s showing much higher in-platform efficiency, it’s probably also delivering better incrementality.

More importantly: Over a dozen brands at Haus have tested moving from either an Advantage+ or Manual-heavy setup (with over 75% of spend in the favored tactic) to a roughly 50/50 balance. On average, brands saw an 18% drop in iROAS by the conclusion of the PTW on a balanced setup relative to a skewed setup.

This is likely why the majority of brands spend at least 75% of their budget on their campaign type of choice rather than splitting up their data in the name of balance. Manual-heavy brands are much more likely to achieve this benchmark – 65% of the time compared to 38% for Advantage+ brands.

In most endeavors, balance is a good thing, but force-fitting it on your Meta setup is fighting against a fickle algorithm that doesn’t care for the sentiment.

Advantage+ drives comparable impact to omnichannel sales

One last note on Advantage+ before we move on to other areas of interest. For all you omnichannel brands out there, you shouldn’t have concerns about the ability of Advantage+ to drive those revered cross-channel halo effects: Among omnichannel brands who tested it head-to-head against Manual campaigns, Advantage+ drove a slightly higher halo effect on non-DTC sales channels (+51% vs +43% for Manual).

Mid-Funnel and Upper-Funnel Testing Insights

So far, we’ve learned that Meta’s advancements in AI-driven tooling (like Advantage+) have shown strength in identifying high-intent prospects, but it’s come at the expense of bringing new people into the funnel, a necessity to maintain funnel health and incrementality long-term. With that in mind, let’s dive into some possible ways to counteract the imbalance created by Advantage+.

Mid-funnel optimization is seeing a resurgence in popularity

For a long time, optimizing towards a mid-funnel conversion event (e.g. adds to cart, PDP Views, quiz completions) has been looked at as a last resort in situations where you don’t have enough conversion volume to optimize towards purchases or revenue. But we’re seeing momentum behind a new use case for these optimization events: Balancing the funnel.

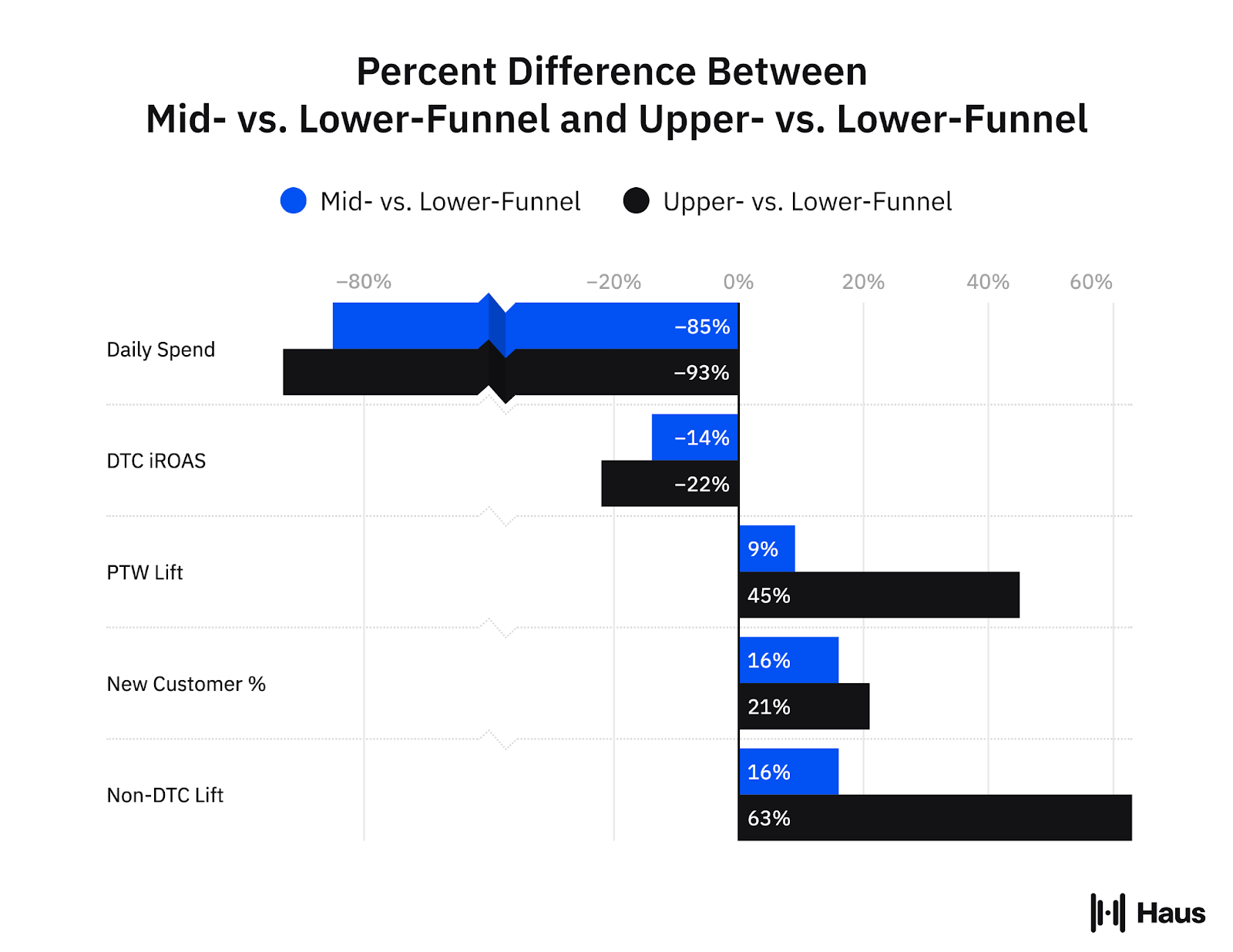

For the average brand, mid-funnel optimization still performs worse than lower-funnel optimization – 14% lower DTC iROAS at 85% lower spend/day. However, mid-funnel campaigns show stronger funnel-building effects (9% higher PTW lift), omnichannel halo effects (+70% vs +46%), and new-customer rates (78% vs 67%).

This may be part of the reason why we’ve seen a +121% spike in mid-funnel optimization test frequency across our customers so far this year.

The historical use case for lower-volume brands also still applies – brands averaging fewer than 5,000 purchase events per week see just 5% lower DTC efficiency from mid-funnel optimization, making it a worthwhile test even for DTC-only brands.

An important reminder about these tests: This is marginal spend on top of the existing purchase-optimized campaigns, and an alternative to continuing to drill that same base of users with more frequency. The goalpost for these campaigns is therefore beating your marginal, or last-dollar, efficiency on your core conversion campaigns, not your cumulative efficiency across all spend.
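The difference between marginal and cumulative efficiency is easiest to see with numbers. The sketch below uses entirely hypothetical spend and incremental revenue figures at two budget levels; none of them come from the study.

```python
def cumulative_iroas(incremental_revenue: float, spend: float) -> float:
    """Average efficiency across all spend."""
    return incremental_revenue / spend


def marginal_iroas(revenue_low: float, spend_low: float,
                   revenue_high: float, spend_high: float) -> float:
    """Efficiency of only the additional dollars between two spend levels."""
    if spend_high <= spend_low:
        raise ValueError("spend_high must exceed spend_low")
    return (revenue_high - revenue_low) / (spend_high - spend_low)


# Hypothetical core conversion campaign at two daily budgets.
spend_low, revenue_low = 10_000.0, 30_000.0
spend_high, revenue_high = 12_000.0, 33_000.0

cumulative = cumulative_iroas(revenue_high, spend_high)
marginal = marginal_iroas(revenue_low, spend_low, revenue_high, spend_high)

# Hypothetical mid-funnel test result for the same incremental budget.
mid_funnel_iroas = 2.0

beats_marginal = mid_funnel_iroas > marginal
beats_cumulative = mid_funnel_iroas > cumulative

print(f"Cumulative iROAS: {cumulative:.2f}x, marginal iROAS: {marginal:.2f}x")
print(f"Mid-funnel beats marginal: {beats_marginal}, beats cumulative: {beats_cumulative}")
```

In this made-up case the mid-funnel campaign loses to the account's cumulative efficiency but beats the last-dollar efficiency of the core campaign, which is the comparison that matters for marginal budget.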

Despite stagnating interest, upper-funnel campaigns show promise of funnel-building and omnichannel effects

First, let us define what we mean by upper-funnel: Campaigns with the objective to drive traffic, reach, video views, awareness, or brand awareness. In other words, non-conversion-objective campaigns.

Year-over-year, there has been essentially no increase in the number of Haus customers utilizing Meta upper-funnel campaigns (70%) or in the share of platform spend going to them (6%). It’s likely that some of this stagnation is owed to macroeconomic headwinds, which tend to blunt upper-funnel spend that has a lower immediate ROI. It’s also possible that brands are identifying more exciting places to spend their top-of-funnel budget, like YouTube or CTV, which have seen an explosion in interest.

But is there reason to revisit these campaigns’ potential? Our data suggests there could be.

While upper-funnel optimization is associated with a 46% lower DTC iROAS compared to bottom-funnel campaigns during the testing period, its iROAS is just 22% lower after the post-treatment period. It also drives 21% more of its impact against new customers (81% vs 67%) and the highest omnichannel effects of any Meta tactic – the same set of brands that saw +46% halo effects on Meta conversion campaigns saw +138% benefits from non-conversion-optimized campaigns. Therefore, the average omnichannel brand could measure a 39% lower DTC iROAS on upper-funnel and still see it perform on par with conversion campaigns once omnichannel effects are considered.
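For those who want to check the 39% figure, here is one way to reproduce it. The sketch assumes a halo effect of +X% means non-DTC incremental revenue equal to X% of DTC incremental revenue, so that total impact is DTC impact times (1 + halo). That reading is an assumption, though it does recover the number quoted above; the function name is hypothetical.

```python
def parity_dtc_discount(halo_reference: float, halo_candidate: float) -> float:
    """How much lower the candidate's DTC iROAS can be while matching the
    reference tactic on total (DTC + non-DTC) iROAS.

    Halo values are fractions, e.g. 0.46 for +46%.
    """
    reference_multiplier = 1.0 + halo_reference
    candidate_multiplier = 1.0 + halo_candidate
    return 1.0 - reference_multiplier / candidate_multiplier


# Halo effects reported above: +46% for conversion campaigns,
# +138% for non-conversion-optimized (upper-funnel) campaigns.
discount = parity_dtc_discount(halo_reference=0.46, halo_candidate=1.38)

print(f"Upper-funnel DTC iROAS can be {discount:.0%} lower and still reach parity")
```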

The glaring caveat on these promising metrics is that upper-funnel campaigns were tested, on average, at just 7% of the daily budget of their conversion-optimized counterparts. Nonetheless, if I were managing growth for an acquisition-oriented, omnichannel brand with limited resources to develop CTV and YouTube assets, I’d give this campaign type a look.

Both tactic types are under-reported relative to sales campaigns

Clearly, there is more to learn about the ideal use of mid- and upper-funnel testing on Meta. But one thing we can say confidently is that you should expect both campaign types to under-report relative to your lower-funnel campaigns.

Below are the average ratios between the incrementality factors brands saw on these tactics relative to lower-funnel campaigns (calibrating against a 7-day click, 1-day view window):

- Mid-funnel optimization: 1.3x

- Traffic optimization: 2.4x

- Reach and awareness optimization: 6.0x

For example, if your IF on lower-funnel is 1.0x, that means $100 in platform-reported revenue would correspond to $100 of incremental revenue to the business. For the same brand, $100 in platform-reported revenue on reach campaigns might translate to $600 in incremental revenue.
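The same calibration can be written as a small lookup. This is a rough sketch that applies the average ratios listed above to a brand's own lower-funnel IF; the dictionary keys and function name are hypothetical, and your own ratios may differ from these averages.

```python
# Average IF ratios relative to lower-funnel campaigns, as listed above
# (7-day click, 1-day view attribution window).
IF_RATIO_VS_LOWER_FUNNEL = {
    "lower_funnel": 1.0,
    "mid_funnel": 1.3,
    "traffic": 2.4,
    "reach_awareness": 6.0,
}


def estimated_incremental_revenue(platform_reported_revenue: float,
                                  lower_funnel_if: float,
                                  tactic: str) -> float:
    """Scale platform-reported revenue by the tactic's implied IF."""
    tactic_if = lower_funnel_if * IF_RATIO_VS_LOWER_FUNNEL[tactic]
    return platform_reported_revenue * tactic_if


# The example from the text: a brand with a 1.0x lower-funnel IF.
lower_funnel_estimate = estimated_incremental_revenue(100.0, 1.0, "lower_funnel")
reach_estimate = estimated_incremental_revenue(100.0, 1.0, "reach_awareness")

print(f"Lower-funnel: ${lower_funnel_estimate:.0f}, reach: ${reach_estimate:.0f}")
```

Treat the output as a starting estimate to validate with a test of your own rather than a substitute for one.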

Incremental Attribution represents hope despite mixed early results

The promise behind Incremental Attribution, Meta’s latest attribution setting, is the ability to “Focus campaigns on outcomes that are more likely to be directly driven by an ad.” If Meta can deliver on this, it would be an enormous boon both for them and for advertisers.

As we’ve already discussed at length, the key issue with AI-driven products like Advantage+ is that they identify likely buyers so well that they can undermine their own incrementality in the process. If the algorithm could effectively distinguish those likely to buy without an ad from those who are only likely to buy with an ad, you would theoretically get the best of both worlds.

For now, at least, it remains just theory. As Incremental Attribution enters general availability, its success rate in Haus tests against standard attribution settings is 43% – far from a home run, but against a limited sample to be sure.

Until we see more data, there’s reason to remain skeptical – causal inference has not historically been a strong point for machine learning models and the research is still early in its development.

That said, if anyone can make it work, it’s Meta. They sit on what is almost certainly the largest database of conversion lift tests on the planet and, as we’ve discussed now ad nauseam (excuse the pun), have built one of the most robust algorithms for personalized ad delivery. If Meta can harness the intent-identification capabilities of Advantage+ with enhancements that improve incrementality, it would make their platform even more powerful for advertisers.

What brands should do now

If you’ve made it this far, you probably need a bathroom break. When you come back, check out these suggestions for what you should and shouldn’t do based on this data:

- Meta is a highly incremental channel – for most brands, the question is more about how to optimize advertising on the platform for efficiency than whether Meta drives lift to the business.

- Test into the ideal balance between automation (Advantage+) and Manual controls – every brand sees different outcomes and there is risk in making assumptions here.

- If you’re an omnichannel brand, the testing burden is even higher – Meta tactics’ omnichannel impacts are not well-correlated with their DTC efficiency.

- Mid- and upper-funnel campaigns provide key levers to balance your funnel and drive omnichannel impact – test into them with a small budget at first and don’t expect to see the same in-platform efficiency.

- Incremental Attribution is worth a test – while it hasn’t yet achieved best-practice status, the more data and feedback Meta is provided, the more opportunity this tool will have to improve.

- Don’t forget about creative – account structure and campaign objectives are just one part of the battle. Creative is arguably the top lever for driving incrementality.

The insights above are just the tip of the iceberg – we’re looking forward to diving into other Meta-specific questions we got from the community on topics like retargeting, bid strategies, consolidation, and creative testing. Keep an eye out for future updates here!

Final reflections

Here at Haus, we don’t take the act of sharing aggregated insights like those above lightly. The data we’re dealing with can be messy – brands from disparate industries running tests of varying holdout sizes throughout different periods of the year. In short, meta-analyses are hard, but we remain committed to bringing you nuanced, critical analysis you can rely on and never cherry-picking data points for the sake of a catchy headline.

While the result of this analysis was not a conclusive embrace of AI-driven campaign automation, we’ve come a long way from the days of sprawling ad accounts and hours-long ad rollouts – something we can all be thankful for. And if Meta’s recent Performance Marketing Summit is any indication of what’s to come, there should be a lot of new things to test out in the coming months.

If you’re looking for a measurement partner to help you answer these questions, we would love to have a conversation about whether Haus is a fit.
