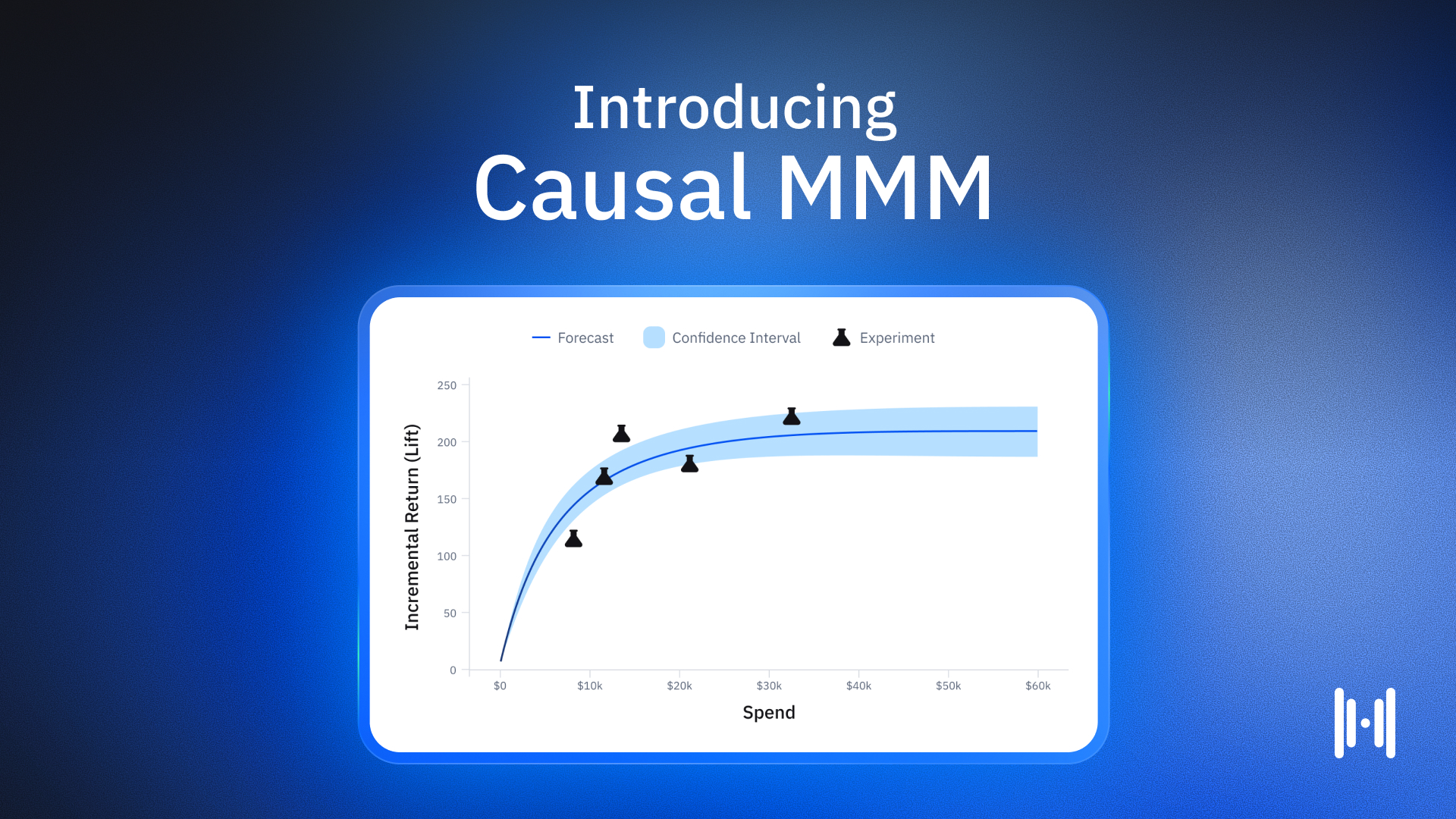

Only experiments — not models — can truly show causality. While marketing mix models (MMM) and multi-touch attribution (MTA) try to give credit across channels, they're prone to bias and only measure correlation.

"In the marketing world, a model is just a really powerful linear regression tool to understand correlation," explains Haus Measurement Strategy Lead Chandler Dutton. "You increase spend on one channel, then sales go up. A model thrives on these relationships between a single variable and a single outcome."

But that's not how most businesses operate.

"From a growth marketing perspective, if you’re increasing spend, you’re probably increasing spend on multiple channels at once," says Chandler. "So if sales are going up, and you're increasing spend on Channel A and Channel B, your model won't be able to untangle which channel is causing the increase in sales."
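To make that point concrete, here is a minimal sketch using made-up spend figures (the numbers, variable names, and the `predicted_sales` helper are illustrative assumptions, not anything from Haus). When spend on two channels always moves in lockstep, very different credit assignments fit the data equally well:

```python
# Hypothetical weekly spend: Channel B is always scaled in lockstep with Channel A.
spend_a = [10.0, 20.0, 30.0, 40.0]
spend_b = [2.0 * a for a in spend_a]


def predicted_sales(beta_a, beta_b):
    """Sales predicted by a linear model with one coefficient per channel."""
    return [beta_a * a + beta_b * b for a, b in zip(spend_a, spend_b)]


# Two very different stories about which channel drives sales...
all_credit_to_a = predicted_sales(beta_a=3.0, beta_b=0.0)
all_credit_to_b = predicted_sales(beta_a=0.0, beta_b=1.5)

# ...produce identical predictions, so this data alone cannot tell them apart.
print(all_credit_to_a == all_credit_to_b)
```

Real spend data is rarely perfectly collinear, but the closer two channels move together, the less reliably a regression can split the credit between them.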

Meanwhile, a well-designed experiment isolates the effect of a specific marketing tactic or channel, giving you "ground truth" you can actually trust. For instance, say a retail brand runs display ads across multiple publishers. They turn off ads for a randomly selected group of customers (the control group) and keep the campaign running for others (the treatment group). Then they compare outcomes between the two groups. If the treatment group outperforms the control, that difference shows the true incremental impact of those display ads — no multicollinearity to muddy the waters.

Now let's dive a bit deeper into how incrementality experiments work and the impact they can have on your business.

What is an incrementality experiment?

An incrementality experiment is a scientific method used to measure the true causal impact of a marketing tactic or channel. Unlike models that only show correlation, incrementality experiments reveal causality by comparing the performance of a treatment group (exposed to marketing) against a control group (not exposed) that is randomly selected. The measured difference between these groups represents the true incremental impact of the marketing activity, free from attribution bias or data overlap across platforms.

How are incrementality experiments different from A/B experiments?

While both involve comparing groups, incrementality experiments specifically measure the causal impact of a marketing tactic by comparing a group exposed to that tactic against a control group receiving no exposure. The key question is: "Would these outcomes have happened anyway without this marketing activity?"

A/B tests typically compare two different versions of something (like different ad creatives or landing pages) to see which performs better, but both groups still receive some form of the marketing treatment. Incrementality experiments, by contrast, measure the total value of a marketing activity compared with doing nothing.

Not all incrementality experiments are created equal

While incrementality experiments beat other methods for revealing causal impact, their validity depends on how rigorous your methodology is. Three things define the strength of any incrementality test: accuracy and precision, transparency, and objectivity.

Accuracy and precision come down to sample selection, randomization, and measurement. If your treatment group isn't truly comparable to your control group, or if your sample size is too small to detect meaningful differences, your results won't reflect reality. Picture this: A travel company tests a new paid search strategy only on weekends, while measuring the control group on weekdays. The confounding variable of day-of-week behavior could totally distort results.
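As a rough illustration of the sample-size point, the sketch below uses the standard normal-approximation formula for comparing two conversion rates. The function name, the default significance level and power, and the example rates are all assumptions chosen for illustration; a real test design may call for a different method.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate users needed per group for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_power = NormalDist().inv_cdf(power)          # critical value for power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical scenario: control converts at 2.0%, and we hope ads lift that to 2.2%.
needed = sample_size_per_group(baseline_rate=0.020, expected_rate=0.022)
print(f"Roughly {needed:,} customers per group")
```

The takeaway is directional: the smaller the lift you need to detect, the more customers each group requires, and the requirement grows quickly as the expected effect shrinks.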

Transparency means being clear about how you designed the experiment, what you measured, and which business questions you were trying to answer. If a direct-to-consumer brand runs overlapping experiments across paid social and influencer marketing, documenting which customers see each tactic is essential for interpreting overlapping effects.

Objectivity requires sticking to hypothesis-driven testing rather than cherry-picking positive results. Say a consumer packaged goods (CPG) company tests a new loyalty program but only reports the most favorable metrics — or dismisses unfavorable outcomes. Those findings become worthless. Objective, pre-registered experiments avoid these pitfalls; they drive decisions with data rather than hope or bias.

What makes incrementality experiments valid and reliable?

Three key characteristics determine the strength of incrementality tests:

- Accuracy and precision: Proper sample selection, randomization, and measurement protocols ensure groups are truly comparable and sample sizes are large enough to detect meaningful differences.

- Transparency: Clear documentation of experimental design, metrics, and business questions allows proper interpretation of results.

- Objectivity: Strict adherence to hypothesis-driven testing prevents cherry-picking favorable results, ensuring decisions are based on data rather than bias.

How do you conduct an incrementality test?

To conduct an incrementality test:

- Define a clear hypothesis about what you want to measure

- Randomly divide your audience into treatment and control groups

- Expose only the treatment group to the marketing activity being tested

- Measure the outcomes for both groups

- Calculate the difference in performance between the groups to determine the incremental impact

For example, a retail brand might turn off display ads for a randomly selected subset of customers (control group) while continuing the campaign for others (treatment group). The difference in performance reveals the true impact of those display ads.
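The steps above can be sketched in a few lines of code. This is a simplified illustration, not a production design: the function names, the 50/50 split, the fixed seed, and the conversion counts are all hypothetical.

```python
import random


def assign_groups(customer_ids, control_share=0.5, seed=42):
    """Randomly split customers into control (ads off) and treatment (ads on)."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(customer_ids)
    rng.shuffle(shuffled)
    cutoff = int(len(shuffled) * control_share)
    return {"control": shuffled[:cutoff], "treatment": shuffled[cutoff:]}


def incremental_lift(treatment_conversions, treatment_size,
                     control_conversions, control_size):
    """Compare conversion rates between groups to estimate incremental impact."""
    treatment_rate = treatment_conversions / treatment_size
    control_rate = control_conversions / control_size
    if control_rate == 0:
        raise ValueError("Control group has no conversions; relative lift is undefined")
    absolute_lift = treatment_rate - control_rate
    return {
        "absolute_lift": absolute_lift,
        "relative_lift": absolute_lift / control_rate,
        "incremental_conversions": absolute_lift * treatment_size,
    }


groups = assign_groups(range(10_000))

# Hypothetical outcomes observed after the test window closes.
result = incremental_lift(
    treatment_conversions=260, treatment_size=len(groups["treatment"]),
    control_conversions=200, control_size=len(groups["control"]),
)
print({name: round(value, 4) for name, value in result.items()})
```

The control group's conversion rate stands in for what would have happened without the ads, so only the gap between the two rates is credited to the campaign.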

Incrementality as a continuous practice

One-off tests give you snapshots, but you'll get the most value from incrementality when you adopt experimentation as an ongoing, high-velocity discipline. Customer behavior, competitive dynamics, and channels all evolve — so point-in-time experiments can quickly become outdated. That's why incrementality should be a continuous practice, always testing new variables, hypotheses, and marketing stimuli.

"For example, we've seen some brands experience softer incrementality during heavy promotional periods," says Haus Measurement Strategist Tarek Benchouia. "This indicates that customers were more driven to purchase by the promotion itself, rather than by paid media. However, other brands see certain promotions drive strong incremental lift (when a gift with purchase is advertised, for example). In these scenarios, paid media actually pulls forward customers more effectively than in BAU times.

"For your brand, the only way you can truly know how a promotion impacts the incrementality of paid media is to run experiments in both BAU and promotional points in time. Once you understand this, you can adjust your spend and GTM strategy to better maximize the impact and efficiency of your marketing dollars."

When you sustain the cadence of experimentation, you're always learning and optimizing for maximum impact. But continuous experimentation isn't just about learning — it's about actionable learning. Each result should directly inform campaign optimization, channel allocation, or creative development. More frequent testing means faster iteration and ultimately smarter, more responsive decision-making.

How frequently should incrementality experiments be conducted?

Incrementality should be treated as a continuous practice rather than a one-time event. Customer behavior, competitive dynamics, and channels constantly evolve, making point-in-time experiments quickly outdated. Organizations should maintain a high-velocity experimentation program, constantly testing new variables, hypotheses, and marketing stimuli to ensure insights remain current and actionable.

Incrementality is unique to your business

There's no universal blueprint for incrementality. Every business operates in its own distinct context, with its own customers, products, seasonality, and media mixes. Benchmarks or case studies from other brands can provide inspiration, but they're not substitutes for direct experimentation. What works for an apparel retailer might prove ineffective — or even counterproductive — for a fintech startup.

"That's why we always take your business's path to conversion into account when designing a testing roadmap," says Haus Measurement Strategist Victoria Brandley. "For instance, brands with higher AOV products and longer paths to conversion should set up longer tests so we can capture that full customer journey in the test and post-treatment window."

To maximize value, you should start by identifying critical business questions — such as "Does scaling paid social outperform affiliate incentives in acquiring high-LTV users?" — and design incrementality tests to answer them. Over time, these learnings become a competitive advantage, giving you insights your rivals don't have.

Can incrementality learnings from one business be applied to another?

While case studies can provide inspiration, incrementality insights are highly specific to each business's unique context, including its customers, products, seasonality, and media mix. What works for one company might be ineffective or counterproductive for another. Each business should build an experiment roadmap tailored to its specific questions and conditions rather than relying on external benchmarks.

Acting on incrementality improves your business

Incrementality experiments aren't just a research exercise — they should inform major business processes like reporting, goal-setting, and budget allocation. When you ground decisions in incrementality, you'll drive better financial performance and improve collaboration between marketing and finance.

Take a national restaurant chain that runs incrementality experiments across search, display, and loyalty channels. If experiments show that a significant portion of conversions attributed to branded search would've happened anyway (low incrementality), the brand can shift budgets toward channels with higher proven incremental impact. Over time, this discipline improves the company's profit and loss (P&L) by reducing wasteful spend and prioritizing what truly works.
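To illustrate how that reallocation logic might work, the sketch below compares cost per attributed conversion with cost per incremental conversion. Every figure here is invented, and `incremental_share` (the fraction of attributed conversions that an experiment found to be truly incremental) is a name assumed for this example.

```python
def cost_per_conversion(spend, conversions):
    """Spend divided by the number of conversions credited to it."""
    return spend / conversions


# Hypothetical channel data; incremental_share would come from experiment results.
channels = {
    "branded_search": {"spend": 50_000, "attributed": 10_000, "incremental_share": 0.10},
    "display": {"spend": 50_000, "attributed": 4_000, "incremental_share": 0.50},
}

attributed_cost = {}
incremental_cost = {}
for name, data in channels.items():
    attributed_cost[name] = cost_per_conversion(data["spend"], data["attributed"])
    incremental = data["attributed"] * data["incremental_share"]
    incremental_cost[name] = cost_per_conversion(data["spend"], incremental)

# Rank channels from cheapest to most expensive under each view.
by_attribution = sorted(channels, key=attributed_cost.get)
by_incrementality = sorted(channels, key=incremental_cost.get)
print("Attribution view:   ", by_attribution)
print("Incrementality view:", by_incrementality)
```

In this made-up scenario, branded search looks cheapest on attributed conversions but most expensive once conversions that would have happened anyway are excluded, which is the pattern that would justify shifting budget.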

Plus, when you quantify the real business lift from marketing investments, incrementality experiments help marketing and finance teams align. You can credibly defend budget requests based on rigorous experimental evidence, while finance gains confidence that dollars go toward proven causal impact, not just correlation.

How should businesses act on incrementality findings?

Incrementality findings should inform major business processes including reporting, goal-setting, and budget allocation. Companies should shift budgets toward channels with higher proven incremental impact and away from those showing low incrementality. This approach improves financial performance by reducing wasteful spend and prioritizing what truly works. Additionally, incrementality experiments facilitate alignment between marketing and finance teams by providing rigorous evidence of marketing's impact.

Real-world examples

Consider a national grocery chain debating whether to invest in programmatic display or digital coupons. They randomly assign a set of markets to receive digital coupons and withhold them in similar markets. Markets with coupons see a 5% lift in total basket size compared to controls. When they run a similar incrementality experiment with programmatic display, the uplift is only 1%. This empirical evidence supports investing in digital coupons over programmatic display.

In another scenario, a mobile gaming company suspects that retargeting lapsed users through social ads might cannibalize organic re-engagement. By withholding social ads from a randomly selected user segment and comparing reactivation rates, they find minimal difference — suggesting paid re-engagement provides little incremental value. With this insight, they reallocate that budget to user acquisition, improving cost-effectiveness.
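One way to judge whether a difference like this is "minimal" is a two-proportion z-test. The sketch below is a simplified illustration: the reactivation counts are hypothetical, the function name is an assumption, and a real analysis might use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(successes_a, size_a, successes_b, size_b):
    """Return the z statistic and two-sided p-value for a difference in rates."""
    rate_a = successes_a / size_a
    rate_b = successes_b / size_b
    pooled = (successes_a + successes_b) / (size_a + size_b)
    std_error = sqrt(pooled * (1 - pooled) * (1 / size_a + 1 / size_b))
    z = (rate_a - rate_b) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical reactivations: users who saw retargeting ads vs. the holdout group.
z, p_value = two_proportion_z_test(
    successes_a=1_030, size_a=20_000,  # treatment: ads shown
    successes_b=1_000, size_b=20_000,  # control: ads withheld
)
print(f"z = {z:.2f}, p = {p_value:.2f}")
```

With these invented counts, the p-value is far above the conventional 0.05 threshold, so the data would not support the claim that retargeting adds reactivations beyond what happens organically.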

Best practices for successful incrementality experiments

Making sure your experiments are valid requires thoughtful planning. Define hypotheses clearly before testing, maintain robust randomization, and document every step for future reference. Regularly challenge assumptions and revisit experiments over time, because what holds true in one quarter may change as market conditions evolve. Don't draw broad conclusions from a single test, and always watch for external factors like seasonality or competitive promotions.

Companies committed to continuous incrementality experimentation foster a culture of curiosity, data-driven rigor, and adaptive learning. This discipline transforms marketing from a cost center sustained by faith into an ROI engine steered by empirical evidence.

What are some best practices for successful incrementality experiments?

Key best practices include:

- Clearly defining hypotheses before testing

- Maintaining robust randomization procedures

- Documenting every experimental step for future reference

- Regularly challenging assumptions and revisiting experiments over time

- Avoiding broad conclusions from single tests

- Being aware of external factors like seasonality or competitive promotions

- Fostering a culture of curiosity and data-driven rigor

- Using findings to directly inform optimization and strategy

The bottom line

Incrementality experiments represent the most reliable approach for figuring out which marketing actions truly move the needle. When you prioritize experimental evidence over model-based guesswork, you gain a transparent, objective, and accurate understanding of channel impact — sharpening both day-to-day tactics and long-term strategy.

With robust, ongoing experimentation tailored to your specific business conditions, you'll unlock smarter decision-making, stronger financial outcomes, and tighter alignment between marketing and finance. In a world drowning in metrics, incrementality experiments are your compass pointing the way forward.
