Causal Intelligence, Explained: How AI Powers Incrementality Testing at Haus

Haus is built on AI and machine learning that strengthens the speed, accuracy, and reliability of incrementality tests.

Tyler Horner, Manager, Solutions Consulting

•

Jan 8, 2026

.png)

For many of us, models and artificial intelligence (AI) can seem like total black boxes. You supply the inputs, the models take over, and then you get an output – but what happens in the middle is basically a mystery that you have zero insight into or control over.

Sound familiar?

We value transparency and the process of learning… a lot. It’s important to us as both consumers and decision makers in this world, as well as stewards of the Haus brand and business. So we figured we’d open the black box, if you will, on how Haus uses models and AI in our products and services.

You’ll see us reference Causal Intelligence – or cAI – throughout this explainer. Think of Causal Intelligence as the sum total of how we use AI at Haus. It’s our proprietary approach to embedding advanced AI and machine learning throughout our product suite and technology – and we’d love to show you what makes it special (and, most importantly, useful).

A little background on Haus science

This is relevant to the conversation – we promise.

Haus was built to measure the incremental gains of paid media investments and marketing activations through the power of experiments. Our initial premise was: What data can I actually trust? Did this activity cause this outcome? We assembled a team of some of the best causal inference and econometrics experts in the world to help build this platform.

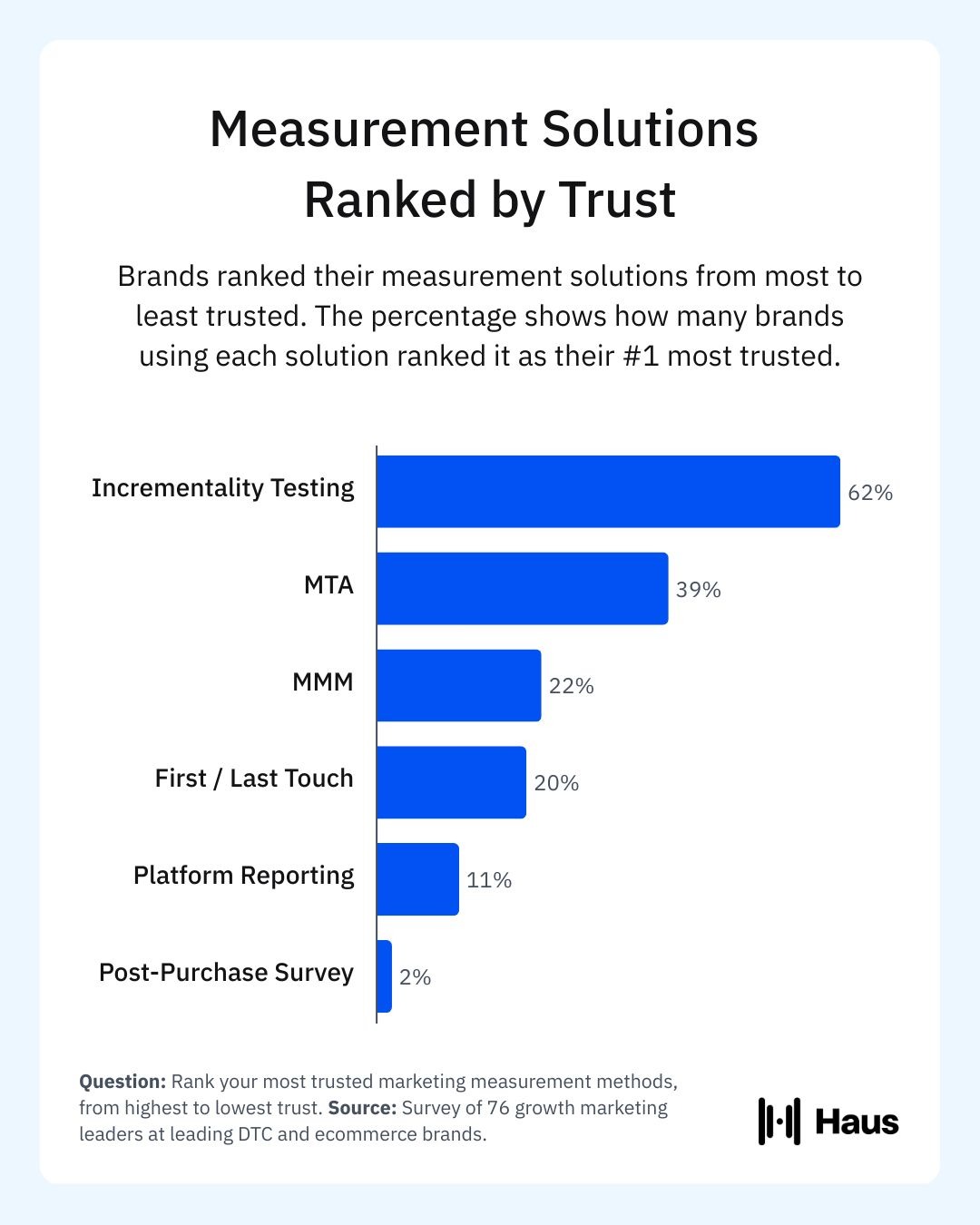

For context, click-based marketing attribution is often misleading and inaccurate – not that you needed us to remind you. Attribution can miss conversions influenced by upper-funnel channels where digital fingerprinting is spotty, like YouTube, CTV, and OOH. It doesn’t capture the effect of marketing on retail sales, and it can also over-report when digital campaigns find customers who were already incrementally influenced by another campaign. With these measurement blind spots come serious trust issues. In our 2025 industry survey, only 39% of marketers identified MTAs as their most-trusted measurement solution, and only 20% identified first-or-last-touch attribution as their most-trusted measurement solution.

In other words: Skepticism towards traditional attribution tools runs rampant.

So rather than being based on clicks or other traditional means of attribution, Haus is based on real-world experiments: We help you create a test group and a holdout group, and then measure the lift (or lack of lift) between the two. We don’t create a model that “predicts” impact. Instead, you’re in the driver’s seat of designing your experiments, and can control power and precision based on your business goals and level of comfort.

That’s at the heart of our incrementality experiments. But woven into this is, of course, AI. For example, you can use Haus Copilot to help you in the experiment design phase. And behind the scenes, AI is enhancing the speed, accuracy, and precision of these experiments with features like Causal Intelligence-powered placebo tests, synthetic controls, outlier detection, and anomaly handling.

Let’s get into it.

Haus incrementality tests are your real-world experiments

To underscore the point: When it comes to classic, geo-based incrementality experiments, Haus doesn’t create a simulation that “predicts” impact. When you set up an incrementality experiment through the Haus app, you are setting up a geo test that will run in the real world – with complete control over the power and precision of your test.

(Note: Time Tests – used to measure big brand moments that aren’t geo-segmentable into treatment and control groups – work a bit differently, but we’ll save that explainer for another time.)

.png)

Power is the likelihood of detecting a positive lift when there is lift to be detected. At Haus, we recommend choosing a holdout size and test duration that achieves at least 80% power. This means that out of 100 different studies, 80 out of the 100 tests will successfully identify effects.

Precision represents the level of uncertainty in the eventual lift percentage estimate. Generally, you can expect an improvement in precision with a larger holdout, longer test duration, and stable historical data.

Those are the basics – but we get that it’s likely been a minute since your last statistics class. That’s where Haus Copilot comes in.

Use Haus Copilot to help answer questions, summarize test results, and partner on problems

While you have control over your test design, we recognize not every marketer is a data scientist or economist. And if you’re in that camp, that’s where Haus Copilot can lend a hand.

Haus Copilot learns from historical data, suggests optimal test setups, and provides real-time, context-aware recommendations to you every step of the way. Use it to help answer questions about test design or feature functionality, summarize test results, and think through challenging problems. For example, you can ask Haus Copilot questions like:

- What are the tradeoffs between DMA and State granularity?

- How should I pick a primary KPI?

- How do I evaluate my iROAS results?

- Can you help me create an experiment hypothesis?

Haus Copilot accelerates education, reduces friction, and enables you to act independently without having to pull in your lovely-but-busy data scientist friends.

Behind the scenes, Haus uses AI to enhance the speed, accuracy, and precision of incrementality experiments

While Haus Copilot is easy to see front and center, Haus’ Causal Intelligence-powered placebo tests, synthetic controls, outlier detection, and anomaly handling execute on scientific rigor behind the scenes. Here’s how they work and why they’re important.

cAI Placebo Tests

Remember power and precision from earlier? If you were wondering what informs those values, the answer is cAI placebo tests.

Haus uses iterative learning to run hundreds (sometimes thousands) of pre-launch placebo tests on historical data for every experiment. We do this to figure out inherent noise for each KPI. cAI placebo testing validates causal claims, reduces the risk of bias or false positives, and provides ongoing quality assurance for data integrity.

cAI-powered, pre-launch placebo tests train on a brand’s historical data (we call this in-sample data), and hold out for testing some of the data that it hasn’t been trained on (we call this out-of-sample data). We do this to assess how well models perform on “new” data to confirm they don’t overfit.

(Real talk: Designing models with historical data isn’t uncommon, but testing these models against data the model hasn’t seen before is rare. It’s an easy corner to cut, and can potentially expose weaknesses in the test design. We believe it’s mission-critical to validating that what you’re doing will result in estimates you can trust.)

This cAI-powered process iteratively improves the selection and matching of control and test groups, validating causal claims and elevating scientific rigor – so you can trust the experiment was designed and run as rigorously as they come.

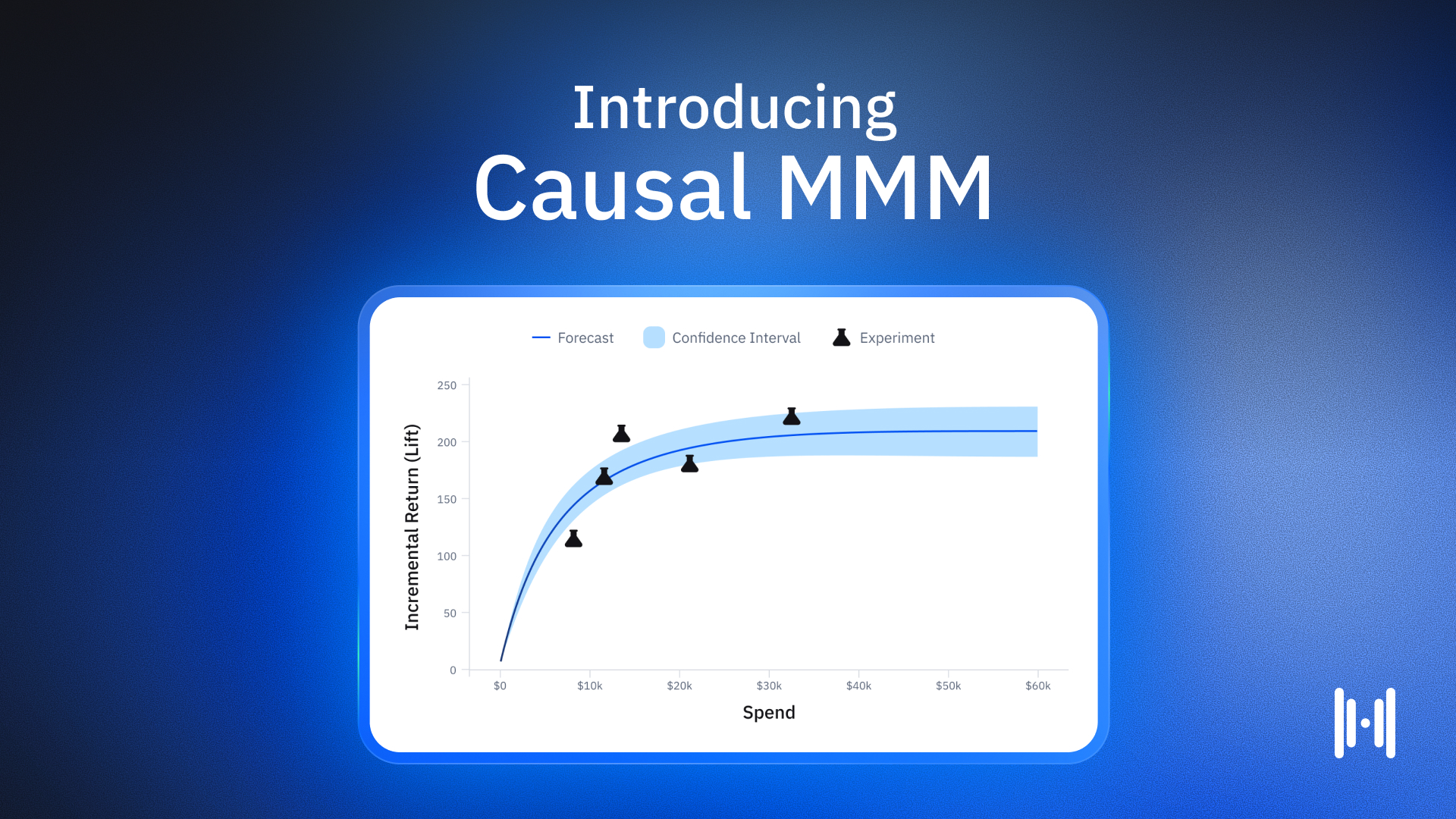

cAI Synthetic Controls

cAI-powered synthetic controls are used in GeoLift and Fixed Geo Tests – in both the design (creating your experiment) and analysis (interpreting your experiment results) phases.

cAI builds synthetic control groups for every experiment, establishing more accurate counterfactuals than traditional methods and enhancing the causal applicability of experiment results. This saves data scientists hours of manual work and delivers unmatched accuracy, enabling faster, more confident decisions rooted in causality.

cAI Outlier Detection and Anomaly Handling

cAI automates and expedites advanced outlier detection and anomaly handling, ensuring that rare or extreme values don’t skew campaign insights. We programmatically run experiment results through cAI-powered daily, weekly, winsorized, and non-winsorized analysis for peak precision and pressure testing.

(Not familiar with winsorization? Don’t sweat it. In outlier detection and statistics, winsorizing means replacing extreme values with cutoff values, instead of removing the extreme values altogether.)

This feature provides an unbiased, reliable assessment of marketing impact, with no human bias towards the “best looking results.” This means teams from marketing to finance can trust that their test results will translate to business outcomes.

Real performance you can crack open and verify

At the heart of everything we do is a genuine desire to help brands make the absolute best decisions for their business. We intentionally don’t put our finger on the scale because we recognize that human tampering can translate into bias in results – and ultimately bad decisions. By using Causal Intelligence, we can trace your data through every step of the process and back up every decision it makes, making every outcome reviewable, explainable, and defensible.

As AI hurtles the world forward – including much of the proprietary technology that powers our own testing, measurement, and optimization products – it remains mission-critical to us that what happens in Haus is not just understandable, but verifiable in a brand’s business data.

It can admittedly be a tight rope to walk – but in this wild modern world where so much is simulated, we believe it’s worth understanding the inner-workings of an AI-driven platform that’s based on and verified in reality.

.png)

.png)

.png)

.png)

.png)

.avif)

.png)

.png)

.png)

.png)

.png)

.avif)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.png)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.webp)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.avif)

.png)

.avif)

.png)

.avif)